Jetson TX2 is NVIDIA's latest board-level product targeted at computer vision, deep learning, and other embedded AI tasks, with a particular focus on "at the edge" inference (when a neural network analyzes new data it's presented with, based on its previous training) (Figure 1). It acts as an upgrade to both the Tegra K1 SoC-based Jetson TK1, covered in InsideDSP in the spring of 2014, and the successor Tegra X1-based Jetson TX1, which BDTI evaluated for deep learning and other computer vision applications last summer. The developer kit, available now in the U.S. and Europe and in other markets beginning in April, is priced at $599 ($299 for education customers) (Table 1). The production module, available through worldwide distribution beginning next quarter, will cost $399 (quantity 1,000) (Table 2).

Figure 1. NVIDIA's latest Tegra SoC, based on 64-bit "Denver 2" and ARM Cortex-A57 CPU cores along with a Pascal-generation GPU, will come in both developer kit (top) and production module (bottom) board formats.

| | Jetson TK1 | Jetson TX1 | Jetson TX2 |
|---|---|---|---|
| SoC | Tegra K1 | Tegra X1 | "Parker Series SoC" |
| CPU cores | | 4 ARM Cortex-A57, 4 ARM Cortex-A53 | 2 "Denver 2", 4 ARM Cortex-A57 |
| GPU core generation | Kepler (192 cores) | Maxwell (256 cores) | Pascal (256 cores) |
| Memory | 2 GByte 64-bit LPDDR2/3 SDRAM, 14.9 GBytes/sec peak bandwidth | 4 GByte 64-bit LPDDR4 SDRAM, 25.6 GBytes/sec peak bandwidth | 8 GByte 128-bit LPDDR4 SDRAM, 58.4 GBytes/sec peak bandwidth |
| Storage | 16 GByte eMMC flash memory | 16 GByte eMMC flash memory | 32 GByte eMMC flash memory |
| Dimensions | 127mm x 127mm | 170mm x 170mm | 170mm x 170mm |
| Price | $192 | $499 ($100 reduction from original) | $599 ($299 for educators) |

Table 1. Jetson developer kit family comparisons

| | Jetson TX1 | Jetson TX2 |
|---|---|---|
| SoC | Tegra X1 | "Parker Series SoC" |
| CPU cores | 4 ARM Cortex-A57, 4 ARM Cortex-A53 | 2 "Denver 2", 4 ARM Cortex-A57 |
| GPU core generation | Maxwell (256 cores) | Pascal (256 cores) |
| Memory | 4 GByte 64-bit LPDDR4 SDRAM, 25.6 GBytes/sec peak bandwidth | 8 GByte 128-bit LPDDR4 SDRAM, 58.4 GBytes/sec peak bandwidth |
| Storage | 16 GByte eMMC flash memory | 32 GByte eMMC flash memory |
| Dimensions | 50mm x 87mm, 400-pin (compatible) connector | 50mm x 87mm, 400-pin (compatible) connector |
| Price | $299 (quantity 1,000) | $399 (quantity 1,000) |

Table 2. Jetson production module family comparisons
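As a rough cross-check of the memory rows in Tables 1 and 2, the sketch below derives the per-pin transfer rate implied by each board's bus width and peak bandwidth. It assumes that peak bandwidth equals bus width in bytes multiplied by transfers per second, and that 1 GByte is 10^9 bytes; the function and variable names are illustrative only and do not come from NVIDIA.

```python
# Implied memory transfer rates from the bus widths and peak bandwidths
# listed in Tables 1 and 2.
# Assumptions (not from NVIDIA): peak bandwidth = bus width in bytes x
# transfers per second, and 1 GByte = 1e9 bytes.

# board name -> (bus width in bits, peak bandwidth in GBytes/sec)
MEMORY_CONFIGS = {
    "Jetson TK1": (64, 14.9),
    "Jetson TX1": (64, 25.6),
    "Jetson TX2": (128, 58.4),
}


def implied_transfer_rate_mtps(bus_width_bits, peak_gbytes_per_sec):
    """Return the implied transfer rate in mega-transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_sec = peak_gbytes_per_sec * 1e9 / bytes_per_transfer
    return transfers_per_sec / 1e6


for board, (width_bits, bandwidth) in MEMORY_CONFIGS.items():
    rate = implied_transfer_rate_mtps(width_bits, bandwidth)
    print(f"{board}: about {rate:.0f} MT/s on a {width_bits}-bit bus")
```

Under these assumptions, the Jetson TX2's higher peak bandwidth comes mostly from the doubled bus width, with a smaller contribution from a faster transfer rate.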

NVIDIA refers to the application processor on the Jetson TX2 developer kit and production module PCBs as a "Parker Series SoC," although the boards' designation would imply the name "Tegra X2" for the chip (both this and "Tegra P1" commonly appear in other industry references). The application processor's specifications are comparable to those of an SoC that NVIDIA discussed publicly for the first time at the 2016 Consumer Electronics Show and disclosed in greater detail at the IEEE Hot Chips conference later that year.

The new Jetson TX2 production module can operate in two modes: "Max-P" for maximum performance and "Max-Q" for maximum energy efficiency. The company claims that in "Max-P" mode the Jetson TX2 delivers up to twice the computational performance of the Jetson TX1 while consuming less than 15W of total module power, and that in "Max-Q" mode it delivers up to twice the energy efficiency of the Jetson TX1 at less than 7.5W of total module power consumption. In "Max-P" mode, the "Parker Series SoC's" GPU runs at 1122 MHz, while the CPU clusters run at 2 GHz (when only one cluster, either the "Denver 2s" or the ARM Cortex-A57s, is in use) or 1.4 GHz (with both clusters running at the same time). In "Max-Q" mode, conversely, the GPU runs at 854 MHz and the ARM Cortex-A57 cluster runs at 1.2 GHz; the "Denver 2" CPU cluster is disabled in this case. Whether the cores' clock speed differences between the two modes are accompanied by core operating voltage differences, which would further influence power consumption, is unknown.
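The two operating points described above can be summarized in a small data structure. This is only an illustrative sketch: `PowerMode`, `MAX_P`, `MAX_Q`, and `gpu_clock_ratio` are hypothetical names, not part of any NVIDIA tool or API, and the power figures are the "less than" ceilings NVIDIA quoted rather than measured values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PowerMode:
    """Hypothetical summary of one Jetson TX2 operating mode (not an NVIDIA API)."""
    name: str
    gpu_mhz: int
    cpu_mhz_one_cluster: int              # clock when a single CPU cluster is active
    cpu_mhz_both_clusters: Optional[int]  # None when both clusters cannot run together
    power_ceiling_w: float                # NVIDIA's claimed "less than" module power


# Values taken from the figures quoted in the article.
MAX_P = PowerMode("Max-P", gpu_mhz=1122, cpu_mhz_one_cluster=2000,
                  cpu_mhz_both_clusters=1400, power_ceiling_w=15.0)
# In Max-Q only the Cortex-A57 cluster runs; the "Denver 2" cluster is disabled.
MAX_Q = PowerMode("Max-Q", gpu_mhz=854, cpu_mhz_one_cluster=1200,
                  cpu_mhz_both_clusters=None, power_ceiling_w=7.5)


def gpu_clock_ratio(mode, reference):
    """Return mode's GPU clock as a fraction of the reference mode's GPU clock."""
    return mode.gpu_mhz / reference.gpu_mhz


print(f"Max-Q GPU clock is {gpu_clock_ratio(MAX_Q, MAX_P):.1%} of Max-P, "
      f"at {MAX_Q.power_ceiling_w / MAX_P.power_ceiling_w:.0%} of the power ceiling")
```

Laid out this way, the comparison shows that the GPU clock drops by roughly a quarter in "Max-Q" mode while the claimed power ceiling is halved, which is consistent with the open question about lower operating voltages.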

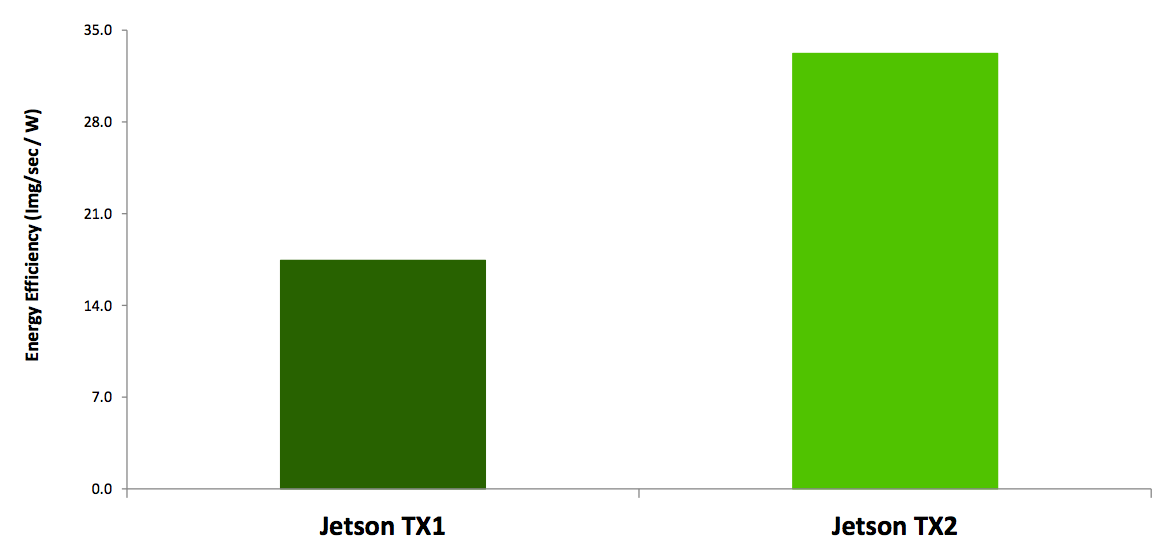

One of the two benchmarks that NVIDIA provided during a recent briefing focuses on GoogLeNet object detection and classification inference, in 16-bit floating-point mode and with a batch size of 128 (Figure 2). Although the Jetson TX1 module achieved slightly higher inference performance than its "Max-Q"-configured Jetson TX2 counterpart—204 images/second vs. 196 images/second—it consumed roughly twice as much power while doing so, meaning that the Jetson TX2 was around twice as energy efficient as the Jetson TX1 in this mode. Presumably when the Jetson TX2 is in "Max-P" mode it will outperform its Jetson TX1 predecessor on this same benchmark, albeit with higher power consumption than when in "Max-Q" mode; NVIDIA did not release benchmark results confirming this hypothesis, however.
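The "around twice as energy efficient" conclusion follows from simple arithmetic on the reported throughput figures. The sketch below works through it, treating the Jetson TX1's power draw as exactly 2x that of the "Max-Q" Jetson TX2; that factor is an assumption standing in for the article's "roughly twice," since absolute power figures for this benchmark were not given.

```python
# Relative energy efficiency (images/second per unit of power) on the
# GoogLeNet benchmark. Power is expressed in relative units because the
# absolute figures for this test are not stated.

TX1_IMAGES_PER_SEC = 204.0
TX2_MAX_Q_IMAGES_PER_SEC = 196.0

# Assumption: "roughly twice as much power" is modeled as exactly 2x.
TX1_RELATIVE_POWER = 2.0
TX2_MAX_Q_RELATIVE_POWER = 1.0


def relative_efficiency(perf_a, power_a, perf_b, power_b):
    """Return how many times more efficient system A is than system B."""
    return (perf_a / power_a) / (perf_b / power_b)


tx2_vs_tx1 = relative_efficiency(
    TX2_MAX_Q_IMAGES_PER_SEC, TX2_MAX_Q_RELATIVE_POWER,
    TX1_IMAGES_PER_SEC, TX1_RELATIVE_POWER,
)
print(f"Jetson TX2 (Max-Q) vs. Jetson TX1 efficiency: {tx2_vs_tx1:.2f}x")
```

Because the Jetson TX2's throughput is about 4% lower, the efficiency gain under this assumption lands slightly below 2x rather than exactly at it.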

Figure 2. When running in its low-power "Max-Q" mode, the Jetson TX2 production module delivers comparable GoogLeNet inference performance to the Jetson TX1 predecessor while consuming only around half the power, according to the company.

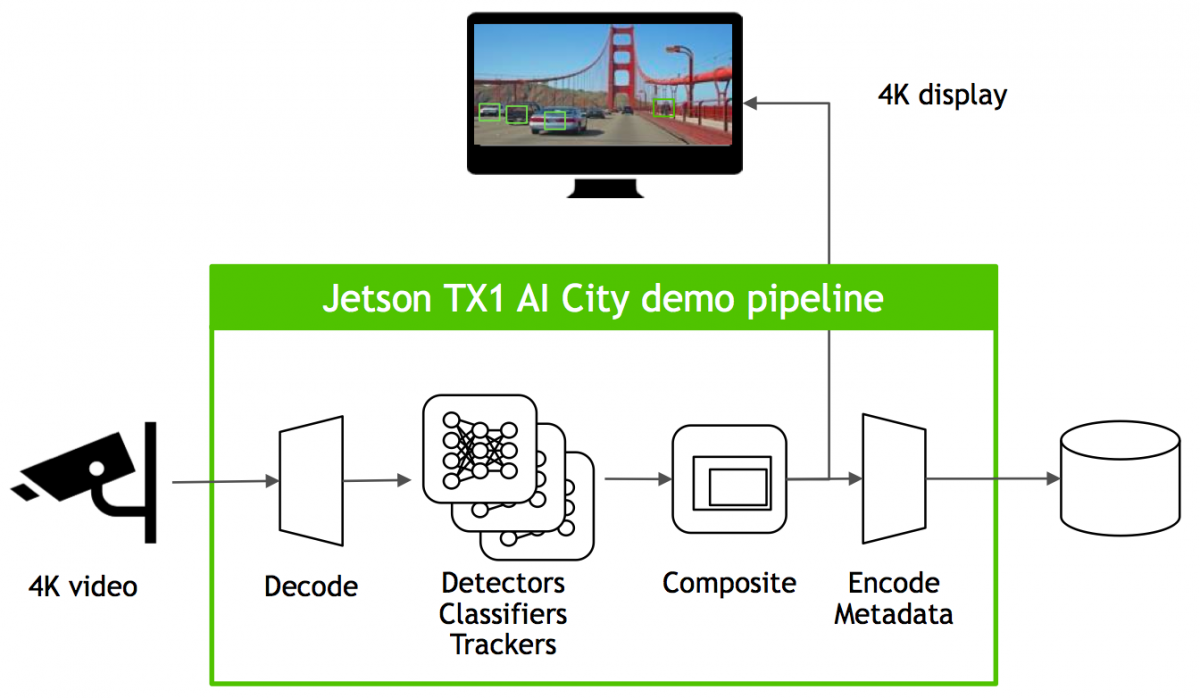

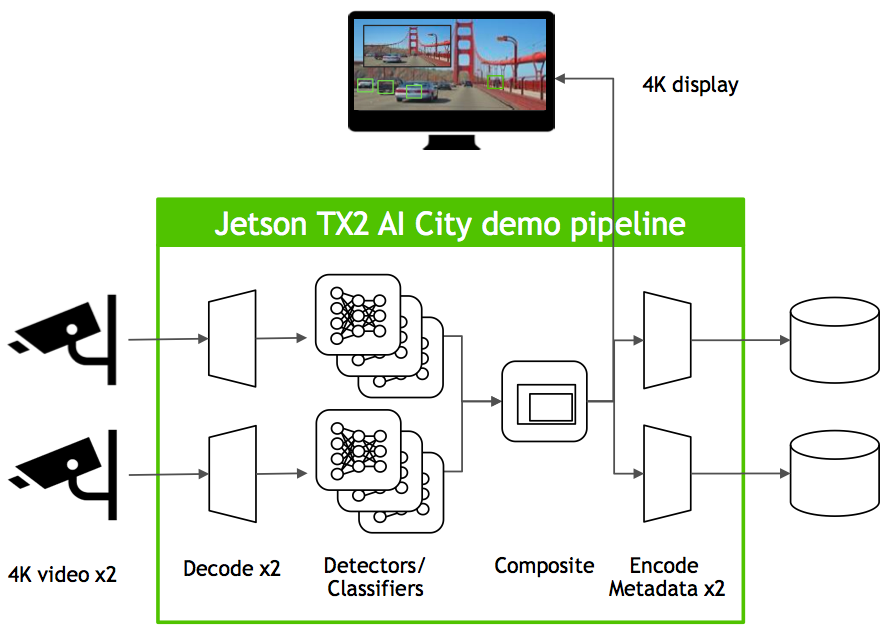

The other benchmark that NVIDIA shared involved decode of one or multiple 4K-resolution (30 fps, H.265-encoded) video streams, followed by the detection, classification and tracking of various objects in those streams, and then the re-encode and display of the metadata-tagged results (Figure 3). The version of the demonstration running on the Jetson TX1, where it consumed 10 W of total module power, encompassed two parallel DNNs (deep neural networks), one dedicated to classifying objects such as vehicles, bicycles and people, and the other further identifying vehicles' types and colors.

Figure 3. A Jetson TX2-based AI demo (top) supports twice the number of 4K-resolution video decodes, detection and classification neural networks, object-tracking capabilities and re-encodes as does its Jetson TX1 predecessor (bottom), says NVIDIA.

The Jetson TX2 module successor, operating in "Max-P" mode and consuming 12W, doubled the number of video decodes and encodes, as well as the number of concurrent DNNs (two each for classification and vehicle type/color identification) and the number of objects that can be tracked concurrently, according to NVIDIA. However, when asked for absolute numbers of objects that can be classified and/or tracked, Deependra "Deepu" Talla, Vice President and General Manager of the Tegra product line, declined to comment, indicating that this result depends on a number of variables: desired accuracy, the specific environmental conditions under which video footage was captured, etc.

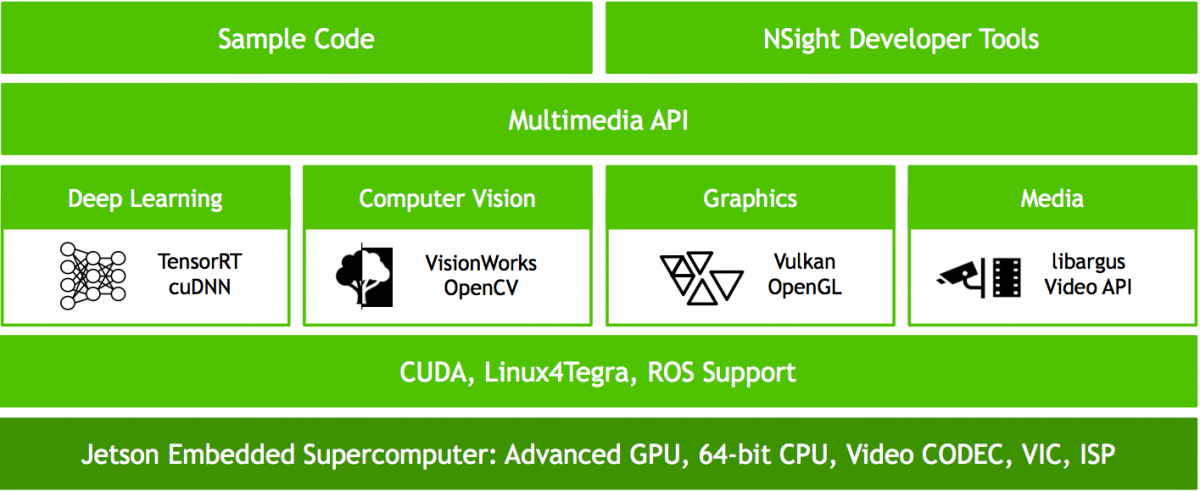

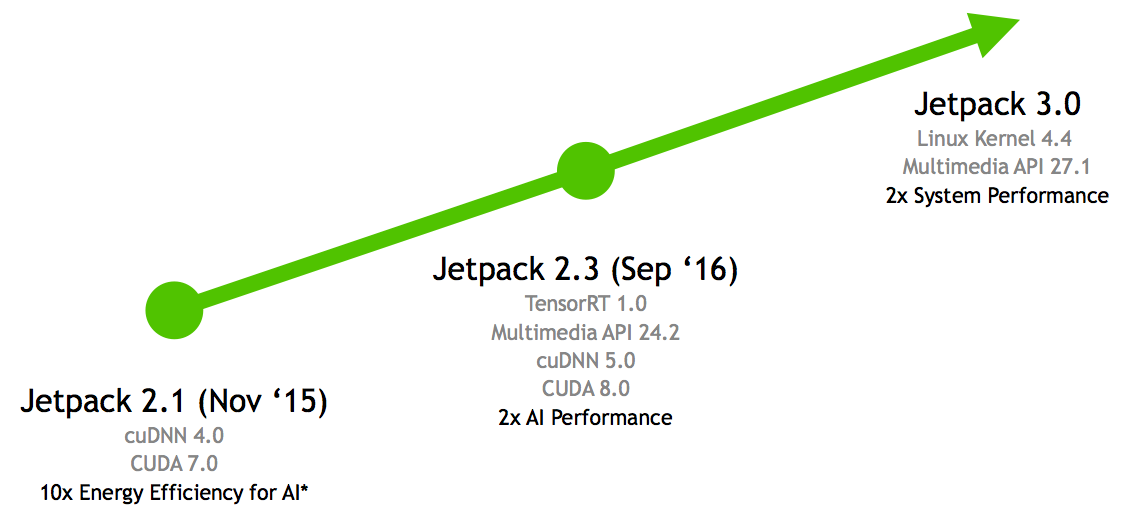

NVIDIA's production module is the company's preferred hardware approach for customers who want to take "Parker Series SoC"-based designs to production, among other reasons because it enables the company to execute ongoing upgrades of its Jetpack SDK based on a known hardware bill-of-materials; Jetpack was also upgraded to v3 coincident with the Jetson TX2 release (Figure 4). For customers interested in implementing custom hardware not based on the TX2 production module, NVIDIA indicates that it will deal with such situations on a case-by-case basis, but only as an exception; such customers should contact the company's sales team for more details. An NVIDIA spokesperson also pointed to the Jetson ecosystem page on the company's website. It currently contains entries for several partners who have developed custom Tegra X1-based production modules, implying that such an intermediary path may also be available for the "Parker Series SoC" in the future.

Figure 4. NVIDIA's Jetpack SDK bundles numerous software tools useful in developing computer vision and other applications for Tegra SoCs (top); latest version 3.0 makes advancements in several key SDK elements (bottom).
