Last year, when CEVA introduced the initial iteration of its CDNN (CEVA Deep Neural Network) toolset, company officials expressed an aspiration for CDNN to eventually support multiple popular deep learning frameworks. At the time, however, CDNN launched with support only for the well-known Caffe framework, and only for a subset of possible layers and topologies based on it. The recently released second-generation CDNN2 makes notable advancements in all of these areas, including both more fully developed support for Caffe and newly added support for Google's TensorFlow framework, all with a continued focus on optimizing deep learning deployments for resource-constrained embedded systems.

As InsideDSP first described last October, CEVA's toolset consists of two primary components. The first is the CEVA Network Generator, a utility that transforms existing pre-trained networks and weight data sets, generated using one of the supported deep learning frameworks, into embedded-ready equivalents (including floating-point to 16-bit fixed-point data conversion whenever feasible). Besides supporting only the Caffe framework, CDNN1 was limited to linear network topologies (such as those used in AlexNet and VGG networks).
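CEVA has not described exactly how the Network Generator chooses fixed-point formats. As a rough illustration of what float-to-16-bit conversion involves, here is a minimal sketch that assumes a simple per-tensor Q-format. It picks the number of fractional bits from the largest weight magnitude, then scales, rounds, and saturates each value. The function names (`choose_frac_bits`, `quantize`, `dequantize`) are hypothetical and are not part of CEVA's tools or APIs.

```python
import math
from typing import List

def choose_frac_bits(weights: List[float], total_bits: int = 16) -> int:
    """Hypothetical per-tensor Q-format choice: the most fractional bits
    (at most total_bits - 1) that still leave enough integer bits for the
    largest |weight|."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return total_bits - 1
    # Integer bits (excluding the sign bit) needed to hold max_abs.
    int_bits = max(0, math.floor(math.log2(max_abs)) + 1)
    frac_bits = total_bits - 1 - int_bits
    if frac_bits < 0:
        raise ValueError("weights too large for the requested word length")
    return frac_bits

def quantize(weights: List[float], frac_bits: int, total_bits: int = 16) -> List[int]:
    """Scale by 2**frac_bits, round to nearest (Python's round: ties to even),
    and saturate to the signed total_bits-wide integer range."""
    scale = 1 << frac_bits
    lo, hi = -(1 << (total_bits - 1)), (1 << (total_bits - 1)) - 1
    return [min(hi, max(lo, round(w * scale))) for w in weights]

def dequantize(values: List[int], frac_bits: int) -> List[float]:
    """Map fixed-point integers back to floats, e.g. to measure conversion error."""
    return [v / (1 << frac_bits) for v in values]

if __name__ == "__main__":
    w = [0.5, -0.25, 0.75, -0.125]
    f = choose_frac_bits(w)   # 15, i.e. Q15
    q = quantize(w, f)        # [16384, -8192, 24576, -4096]
    print(f, q, dequantize(q, f))
```

The saturation step matters because rounding can push a value just past the representable range. For example, 1.99999 in Q14 rounds to 32768 and is clamped to 32767. The `total_bits` parameter is only an illustration of how other word lengths (such as 8-bit) could be handled. It does not describe any announced CDNN feature.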

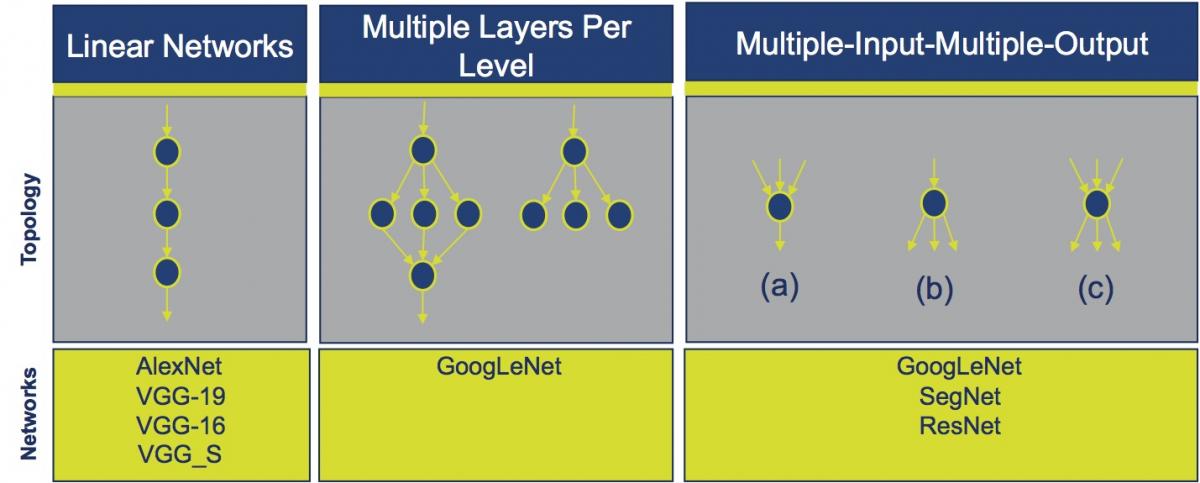

CDNN2 broadens its capabilities to encompass several topologies:

- multiple layers per level, found in network models like GoogLeNet
- MIMO (multiple input/multiple output), as employed by SegNet and ResNet
- fully convolutional topologies
- "inception" (i.e., network within a network) structures

As previously mentioned, these pre-trained neural network models and their associated weights can now come from either the Caffe or the Google TensorFlow framework. The same applies to custom models that a customer creates from scratch. TensorFlow support was also showcased in the recent announcement of Movidius' conceptually similar Fathom toolset.
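The difference between CDNN1's linear topologies and CDNN2's branching ones can be made concrete with a small sketch. It assumes a network is described as a mapping from each layer name to the list of layers feeding it. This is a hypothetical representation, not CEVA's or Caffe's format. A single chain, as in AlexNet or VGG, passes the check. An inception-style fan-out, or a layer with several inputs such as a ResNet residual add, does not.

```python
from typing import Dict, List

def is_linear_topology(inputs: Dict[str, List[str]]) -> bool:
    """Hypothetical check: True if the layers form one chain, where every layer
    has at most one input, feeds at most one other layer, and all layers are
    reachable from a single entry layer."""
    next_layer: Dict[str, str] = {}
    for layer, srcs in inputs.items():
        if len(srcs) > 1:
            return False  # multi-input layer (e.g. concatenation, residual add)
        for src in srcs:
            if src in next_layer:
                return False  # src fans out to more than one layer
            next_layer[src] = layer
    entries = [layer for layer, srcs in inputs.items() if not srcs]
    if len(entries) != 1:
        return False
    # Walk the chain from the single entry; it must visit every layer once.
    seen = set()
    node = entries[0]
    while node is not None and node not in seen:
        seen.add(node)
        node = next_layer.get(node)
    return len(seen) == len(inputs)

if __name__ == "__main__":
    chain = {"conv1": [], "pool1": ["conv1"], "conv2": ["pool1"],
             "fc": ["conv2"], "softmax": ["fc"]}
    inception_like = {"input": [], "conv1x1": ["input"], "conv3x3": ["input"],
                      "concat": ["conv1x1", "conv3x3"]}
    print(is_linear_topology(chain), is_linear_topology(inception_like))  # True False
```

The final walk guards against layer maps that satisfy the one-input/one-output rule but contain a disconnected cycle.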

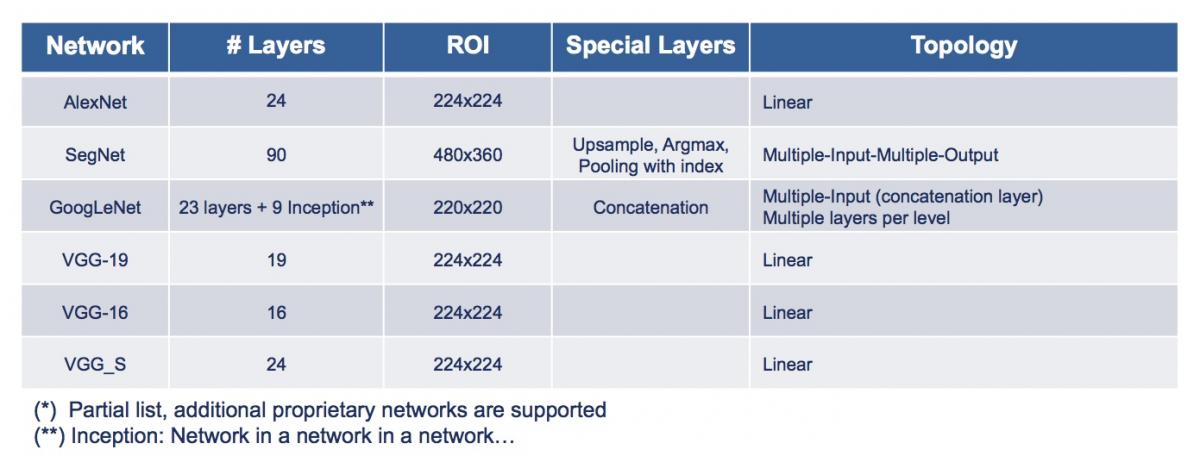

The other key piece of CEVA's CDNN toolset is its set of real-time neural network function libraries, along with accompanying APIs, which enable optimized execution of neural networks on the company's XM4 vision processor core. Here, too, CEVA has expanded the layer types that it supports. These now include deconvolution, concatenation, up-sample, argmax, and customer-proprietary functions, in addition to the previously supported input manipulation, convolutional, normalization, pooling, fully connected, softmax, and activation layers (Figure 1).

Figure 1. Version 2 of CEVA's CDNN toolset extends support to multiple network topologies (top) and layer types (bottom).

In its recent briefing with BDTI, CEVA also provided a brief glimpse into its intentions for the upcoming CDNN3. Unsurprisingly, support for additional software frameworks is planned (possibilities include Theano, Torch, and Microsoft's CNTK). So is the ability to convert floating-point weights and other data structures into a broader range of fixed-point formats. CEVA also plans to support next-generation vision processor cores with CDNN3. However, company representatives Liran Bar (Director of Product Marketing), Eran Briman (VP of Marketing), and Erez Natan (Imaging and Computer Vision Algorithms Team Leader) were mum on hardware details, as well as on when CDNN3 might begin shipping.

CDNN2, however, is available now; the video below shows it in action (Video 1). As you'll see, the XM4 vision processor core currently still runs in unoptimized form on an FPGA. According to company representatives, SoC-based implementations of the core from CEVA's licensees should begin to appear by the end of this quarter.

Video 1. Demonstration of CEVA's CDNN2 deep learning toolkit.
