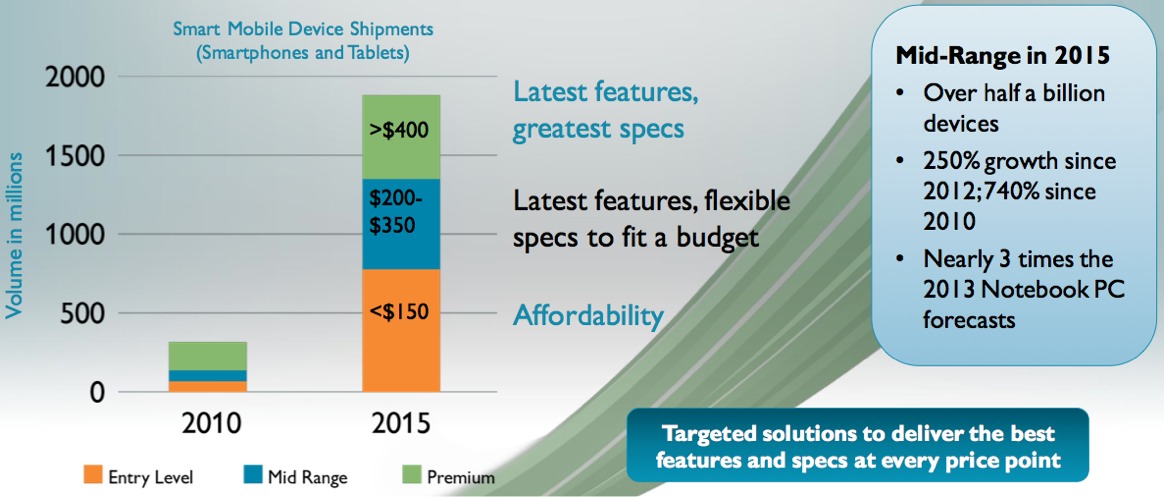

It will likely be news to none of you that the smartphone and tablet market has been on a steep ramp in recent years, and is expected to continue its aggressive growth for the foreseeable future (Figure 1):

Figure 1. ARM forecasts continued vigorous growth for smartphones and tablets over the next few years, and needs a mid-range successor to the venerable Cortex-A9 both to keep meeting customers' requirements and to fend off competitive challenges from Intel's Atom and other alternative processor architectures.

This seemingly insatiable demand is fueled by multiple factors: first-time hardware purchasers, upgrades from conventional cellphones to smartphones, and updates from one generation of smartphones and tablets to the next. It will also likely be news to none of you that, in spite of Intel's aggressive efforts to push Atom and other x86 CPUs into mobile electronics designs (see "Smartphone Benchmarks: Caveat Emptor" in this month's edition of InsideDSP), ARM's licensees continue to capture the lion's share of the business.

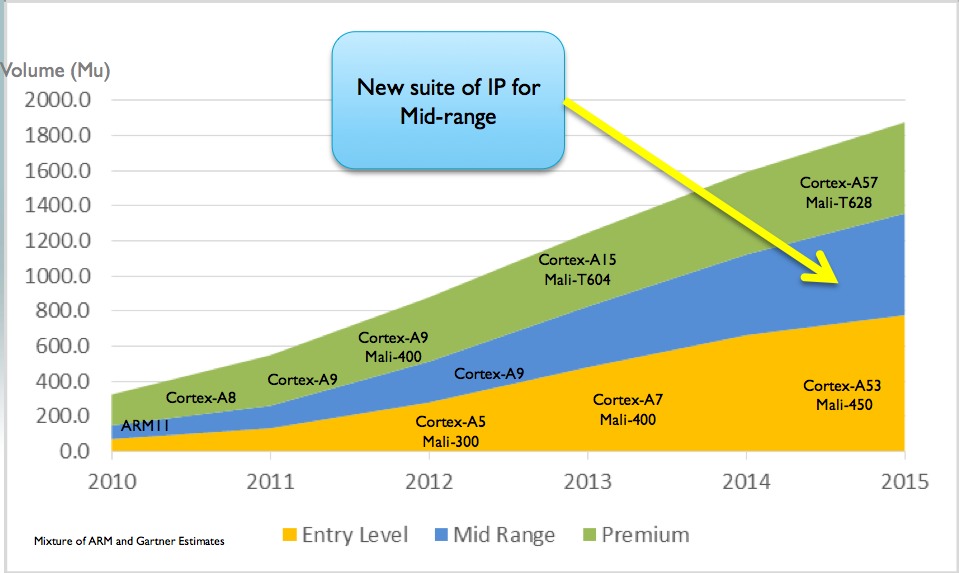

If you peruse ARM's product line evolution in recent years, you'll notice that high- and low-end CPU and GPU cores have rolled out more rapidly than their mid-range counterparts (Figure 2).

Figure 2. Whereas the high-end Cortex-A57 and low-end Cortex-A53 are both 64-bit processor cores, ARM has remained true to its 32-bit roots with the mid-range Cortex-A12, whether standalone or paired with the Cortex-A7 in a big.LITTLE multi-core arrangement.

The November 2011 edition of InsideDSP, for example, analyzed ARM's Cortex-A15 core, which took over the high-end 32-bit mantle as the Cortex-A9 moved into the mid-range of the product line. It also covered the low-end Cortex-A7, the successor to the Cortex-A5. While either of the new cores could be used standalone in SoCs, in single- or multi-core arrangements, ARM advocated their joint use in a configuration it referred to using the marketing moniker "big.LITTLE."

Samsung has developed perhaps the most extreme implementation of the concept, combining four Cortex-A7s and four Cortex-A15s in the Exynos 5 Octa SoC, found in some versions of the Galaxy S4 handset. To the operating system, the Exynos 5 Octa appears to be a quad-core processor. The Cortex-A7 and Cortex-A15 are both ARMv7 instruction set-compatible; the former consumes less power but also delivers lower performance. The SoC rapidly and automatically switches between the two quad-core clusters based on the system's processing demands at each point in time.
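The switching decision described above can be pictured as a simple load-driven policy. The Python sketch below is a hypothetical illustration, not Samsung's or ARM's actual algorithm: the `ClusterSwitcher` class, the two threshold values, and the use of a single utilization number per interval are all assumptions made for the example. Production implementations are considerably more involved.

```python
from dataclasses import dataclass

# Hypothetical thresholds, expressed as a fraction of the active cluster's
# capacity. They are illustrative only, not Samsung or ARM values.
UP_THRESHOLD = 0.85    # move from LITTLE (Cortex-A7) to big (Cortex-A15) above this
DOWN_THRESHOLD = 0.30  # move from big back to LITTLE below this


@dataclass
class ClusterSwitcher:
    """Toy model: exactly one quad-core cluster is active at a time."""
    active: str = "LITTLE"  # "LITTLE" (4x Cortex-A7) or "big" (4x Cortex-A15)

    def update(self, utilization: float) -> str:
        """Choose the cluster for the next interval from the latest load sample.

        The gap between the two thresholds (hysteresis) keeps the policy from
        bouncing between clusters when load hovers near a single cutoff.
        """
        if self.active == "LITTLE" and utilization > UP_THRESHOLD:
            self.active = "big"
        elif self.active == "big" and utilization < DOWN_THRESHOLD:
            self.active = "LITTLE"
        return self.active


switcher = ClusterSwitcher()
load_trace = [0.2, 0.9, 0.6, 0.5, 0.25, 0.4]
print([switcher.update(u) for u in load_trace])
# ['LITTLE', 'big', 'big', 'big', 'LITTLE', 'LITTLE']
```

With this made-up trace, the policy stays on the big cluster through the moderate 0.6 and 0.5 readings and drops back to the LITTLE cluster only once load falls below the lower threshold.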

Following the unveiling of its first big.LITTLE pairing, ARM announced its first ARMv8 architecture CPU cores, the Cortex-A53 and Cortex-A57, in October 2012. As with the Cortex-A7 and Cortex-A15, these 64-bit successors focus on low power consumption and high performance, respectively, and can operate either standalone or in a big.LITTLE combination. Although we'll need to wait until at least 2014 for ARM's 64-bit CPU cores to begin appearing in licensees' SoCs, they're necessary for ARM to mount a serious challenge to the current x86 dominance in servers.

As InsideDSP noted in December 2012, in discussing Texas Instruments' Cortex-A15-based SoCs for special-purpose servers, "Conventional servers typically use 64-bit AMD and Intel CPUs, running 64-bit operating systems (Windows, Linux/Unix, and Mac OS X) and applications. The transition from the x86 to the ARM instruction set will be challenging enough for system developers and users alike. A further downgrade from 64-bit to 32-bit processing may be an unacceptable tradeoff, even considering the power consumption savings."

More generally, 64-bit support is a prerequisite to enabling the ARM ecosystem to pursue conventional computer form factors such as desktops and laptops. You might think, therefore, that a mid-range successor to the Cortex-A9 would—like its high-end Cortex-A57 and low-end Cortex-A53 counterparts—also be ARMv8-based and 64-bit cognizant. You might think so...but you'd be wrong.

In early June, ARM unveiled the Cortex-A12 CPU core, along with the Mali-T622 GPU core and the Mali-V500 video processing core. The market volume opportunity shown in Figure 1, together with the pricing assumptions that the figure also documents, quantifies the need for a mid-range next-generation processor. The Cortex-A12's estimated die size relative to the Cortex-A57 and Cortex-A53 is therefore critical to meeting system developers' bill-of-materials cost expectations while maintaining SoC suppliers' profit expectations (Figure 3).

Figure 3. The comparative die sizes of the Cortex-A57 (top), Cortex-A12 (middle) and Cortex-A53 (bottom), along with system developers' mid-range bill-of-materials cost expectations, motivated the new core's development.

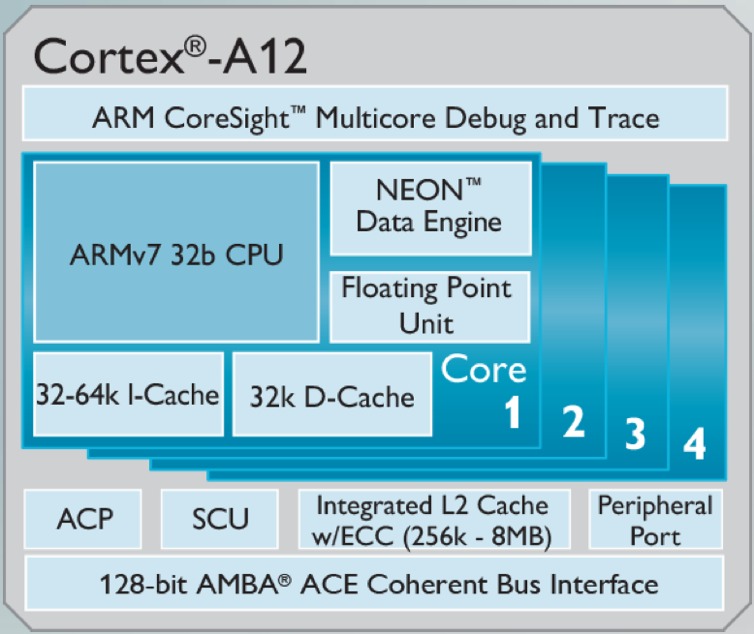

The Cortex-A12 is not a 64-bit processor; instead, as its name implies, it slots in between the Cortex-A9 and Cortex-A15 in terms of features and performance. Like the Cortex-A15 and Cortex-A7, but unlike the Cortex-A9, it supports LPAE (Large Physical Address Extension) 40-bit physical memory addressing. And like the Cortex-A15, it's intended for optional big.LITTLE pairing with the Cortex-A7 on the same SoC, since all three cores implement the same 128-bit AMBA ACE bus interface.
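The practical effect of 40-bit physical addressing is easy to quantify. The short Python calculation below, assuming byte-addressed memory, compares the physical address space reachable with 32 and 40 address bits. Note that LPAE widens only physical addresses: ARMv7 virtual addresses remain 32 bits wide, so each process still sees at most 4 GiB, which is why LPAE is no substitute for a 64-bit architecture.

```python
GIB = 2 ** 30  # bytes per gibibyte


def physical_address_space_bytes(address_bits: int) -> int:
    """Bytes reachable with a byte-addressed physical address of this width."""
    return 2 ** address_bits


for label, bits in [("32-bit physical (Cortex-A9)", 32),
                    ("40-bit physical via LPAE (Cortex-A12)", 40)]:
    size_gib = physical_address_space_bytes(bits) // GIB
    print(f"{label}: {size_gib} GiB")
# 32-bit physical (Cortex-A9): 4 GiB
# 40-bit physical via LPAE (Cortex-A12): 1024 GiB
```

The eight extra address bits multiply the reachable physical memory by 2^8 = 256, from 4 GiB to 1 TiB.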

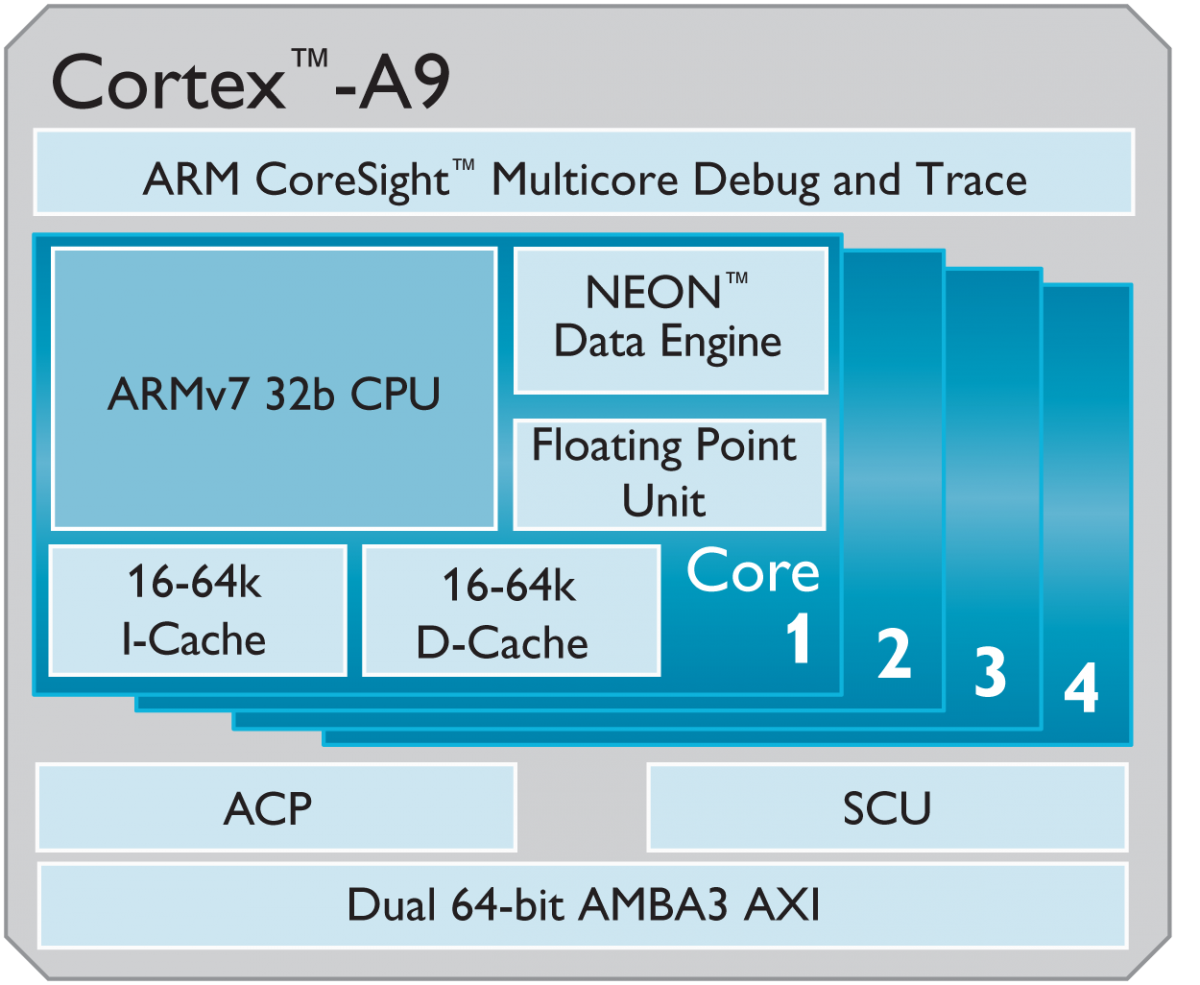

How does the Cortex-A12 build on the Cortex-A9 foundation? Only some of the Cortex-A12 architecture details are public at this point; ARM doesn't anticipate that SoCs based on the core will begin sampling for at least a year. But here's what we know so far:

- Befitting the higher transistor budget available on modern semiconductor processes, the NEON 128-bit SIMD extension unit is required on the Cortex-A12, whereas it is optional (albeit commonly implemented) on the Cortex-A9.

- Whereas the NEON and floating-point units were in-order on the Cortex-A9, they're out-of-order on the Cortex-A12, making the newer CPU a fully out-of-order design.

- The Cortex-A9 pipeline depth is 8 stages; on the Cortex-A12, it's 11 stages (and on the Cortex-A15, it's 15 stages). A deeper pipeline allows for higher clock rates on a common semiconductor process, albeit at the cost of a larger penalty for each branch misprediction, since more in-flight work must be discarded and refetched. As the comparative block diagrams reveal, however, the minimum instruction and data cache sizes on the Cortex-A12 are twice those of the Cortex-A9; fewer cache misses should help offset some of the performance lost to mispredictions (Figure 4).

Figure 4. Evolutionary improvements from the Cortex-A9 (top) to the Cortex-A12 (bottom) include a deeper pipeline, the guaranteed presence of the NEON 128-bit SIMD "engine," full out-of-order support, and larger minimum instruction and data cache sizes.
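The pipeline-depth tradeoff can be explored with the textbook stall model, in which effective cycles per instruction (CPI) equal a base CPI plus branch frequency × misprediction rate × misprediction penalty. The Python sketch below applies that model. Every input (clock rates, branch mix, misprediction rate, and the simplification that the penalty in cycles roughly equals pipeline depth) is a hypothetical illustration, not an ARM figure.

```python
def time_per_instruction_ns(clock_ghz: float, base_cpi: float,
                            branch_fraction: float, mispredict_rate: float,
                            penalty_cycles: float) -> float:
    """Average time per instruction under a simple branch-stall model.

    effective CPI = base CPI
                    + (branches per instruction)
                    * (mispredictions per branch)
                    * (cycles lost per misprediction)
    """
    cpi = base_cpi + branch_fraction * mispredict_rate * penalty_cycles
    return cpi / clock_ghz  # cycles divided by cycles-per-nanosecond


# Hypothetical workload: 20% branches, 5% of them mispredicted, base CPI 1.0.
# Hypothetical penalties: roughly one cycle per pipeline stage (8 vs. 11).
# Hypothetical clocks: the deeper pipeline is assumed to reach a 20% higher
# frequency on the same process. None of these numbers come from ARM.
shallow = time_per_instruction_ns(1.5, 1.0, 0.20, 0.05, penalty_cycles=8)
deep = time_per_instruction_ns(1.8, 1.0, 0.20, 0.05, penalty_cycles=11)
print(f"8-stage @ 1.5 GHz:  {shallow:.3f} ns per instruction")  # 0.720
print(f"11-stage @ 1.8 GHz: {deep:.3f} ns per instruction")     # 0.617
```

With these made-up inputs the deeper pipeline still comes out ahead, because its assumed clock-rate gain outweighs the extra misprediction stalls. If that clock advantage shrank below roughly 2.8 percent (1.11 / 1.08 ≈ 1.028), the shallower pipeline would win.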

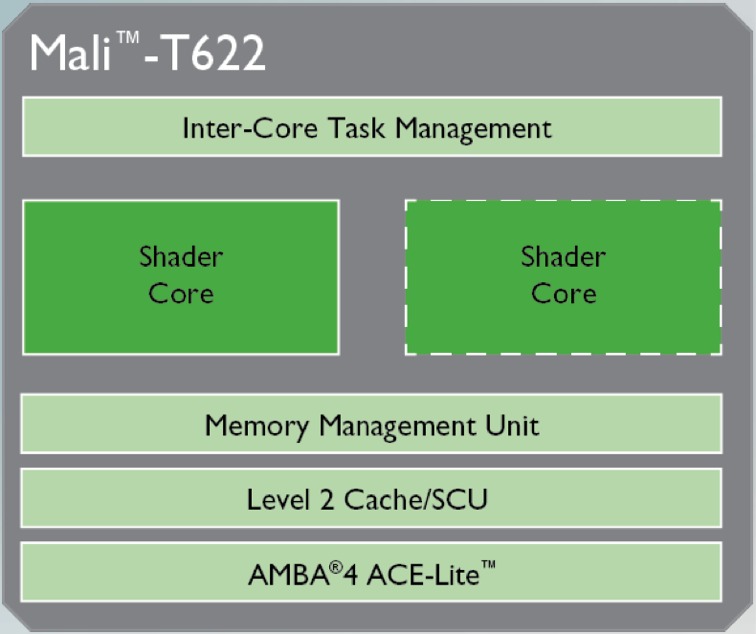

In aggregate, company officials estimate that these enhancements will enable the Cortex-A12 to deliver a 40 percent performance uptick versus the Cortex-A9 on the same process node, with equivalent energy efficiency. ARM isn't solely a CPU supplier, of course, so it's perhaps not a surprise to hear that the company took advantage of the early-June 2013 press event to also announce a few other cores. First, there's the Mali-T622 graphics core (Figure 5).
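Taken together, the two halves of ARM's performance claim have a concrete implication. Assuming "energy efficiency" means work completed per joule (an interpretation, since ARM's wording does not define it), equal efficiency combined with 40 percent higher performance works out as follows:

```latex
\begin{aligned}
\text{power} &= \frac{\text{energy}}{\text{time}}
             = \frac{\text{work}/\text{time}}{\text{work}/\text{energy}}
             = \frac{\text{performance}}{\text{efficiency}} \\[4pt]
\frac{P_{\text{A12}}}{P_{\text{A9}}} &= \frac{1.4}{1} = 1.4, \qquad
\frac{t_{\text{A12}}}{t_{\text{A9}}} = \frac{1}{1.4} \approx 0.71, \qquad
\frac{E_{\text{A12}}}{E_{\text{A9}}} = 1.4 \times \frac{1}{1.4} = 1
\end{aligned}
```

Under this reading, the Cortex-A12 would draw about 40 percent more power while busy, but would finish a fixed task in roughly 71 percent of the time, consuming the same energy per task.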

Figure 5. The Mali-T622 GPU, befitting its mainstream intent, is a two-shader variant of the earlier-announced eight-shader Mali-T628.

It's a dual-shader variant of the Mali-T628, unveiled almost one year ago as an initial member of the second-generation Mali-T600 architecture. Each shader core includes two ALUs, a load/store unit (LSU) and a texture unit, and the GPU supports both OpenGL ES 3.0 and OpenCL 1.1 Full Profile.

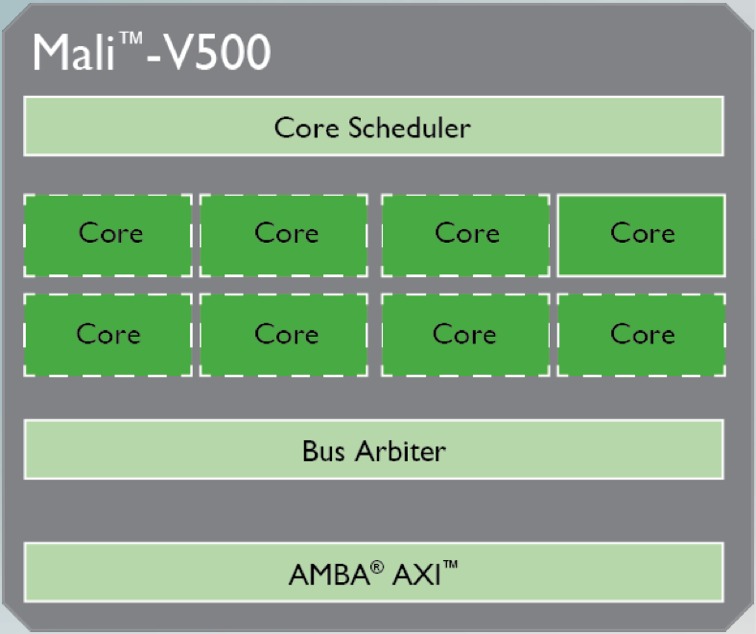

Also announced in early June was the first entrant in a new ARM product line, the Mali-V500 video processing core, which ARM's website refers to as "the foundational product of the upcoming ARM Mali Video series" (Figure 6).

Figure 6. ARM's Mali-V500, the first of a planned multi-member video processor core family, offloads imaging operations from other SoC processing resources.

As with a DSP core such as Qualcomm's Hexagon, the intent with the Mali-V500 is to offload the CPU and GPU for energy-efficient processing of multimedia functions. The focus in this case, however, is narrowly directed at photo and video encoding, decoding and other similar image-related functions.

To clarify, the Mali-V500 is not intended for embedded vision processing (e.g., face recognition, gesture interfaces, and augmented reality), unlike cores such as CEVA's MM3101, Tensilica's IVP, or the PVP (pipelined vision processor) inside some of Analog Devices' latest Blackfin SoCs. And although the Mali-V500 supports a wide range of image and video formats, including H.264 and VP8 (the codec Google obtained when it purchased On2 Technologies several years ago, and the video format at the heart of Google's WebM), it supports neither the recently finalized VP9 nor H.264's successor, H.265, also known as HEVC (High Efficiency Video Coding). These and other leading-edge codecs can, of course, alternatively be implemented on the SoC's CPU and GPU.

ARM's decision to stay the 32-bit course with the Cortex-A12 core is a pragmatic recognition that although 64-bit operating systems are widespread on conventional computing platforms, 32-bit OSs remain dominant in smartphones, tablets and other mobile electronics devices, and will likely remain so for some time. The Cortex-A12 appears to be an able successor to the wildly popular Cortex-A9, which ARM unveiled in 2007. Whether implemented standalone or paired with the in-order Cortex-A7 in SoCs willing to trade higher transistor counts for a lower average power consumption profile, the Cortex-A12 seems poised to power the next generation of mainstream mobile electronics system designs. And the Mali-T622 GPU and Mali-V500 video processor complete the picture, both figuratively and literally.
