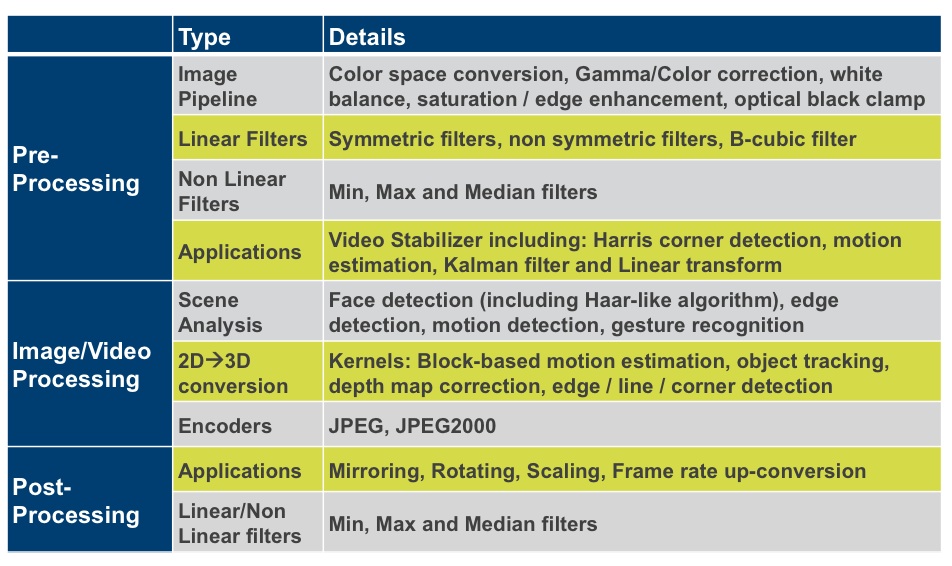

In January 2013, InsideDSP covered the CEVA-MM3101, the company's first DSP core targeted not only at still and video image encoding and decoding tasks (akin to the prior-generation MM2000 and MM3000) but also at a variety of image and vision processing tasks. At that time, the company published the following table of MM3101 functions that it provides to its licensees (Table 1):

Table 1. The initial extensive software function library unveiled in conjunction with the CEVA-MM3101 introduction is further expanded by CEVA's super-resolution algorithm.

Company officials indicated that CEVA would steadily increase the library of functions going forward. And in April 2013, the company followed through on its promise, publicly releasing details on its software-based super-resolution algorithm. Although the above table indicates that image scaling was already supported by the MM3101, that particular scaling algorithm works on single frames and uses conventional techniques: blending pixels together to down-scale a source image and interpolating between them for up-scaling purposes.
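For reference, the conventional single-frame approach described above can be sketched in a few lines of Python. This is a generic illustration, not CEVA's MM3101 library code. It assumes a grayscale image stored as a list of rows, down-scales 2x by averaging ("blending") each 2x2 block, and up-scales 2x by bilinear interpolation. Interpolation only estimates values between existing pixels, so it cannot recover detail that the single source frame never captured.

```python
def downscale_2x(img):
    """Halve each dimension by averaging (blending) each 2x2 block of pixels."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(w)
        ]
        for y in range(h)
    ]


def upscale_2x_bilinear(img):
    """Double each dimension by bilinear interpolation between neighboring pixels."""
    h, w = len(img), len(img[0])
    out_h, out_w = 2 * h, 2 * w
    out = [[0.0] * out_w for _ in range(out_h)]
    for oy in range(out_h):
        # Map the output pixel center back into source coordinates, clamped to the image.
        sy = min(max((oy + 0.5) / 2 - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        for ox in range(out_w):
            sx = min(max((ox + 0.5) / 2 - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two source rows, then vertically between them.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            out[oy][ox] = top * (1 - fy) + bottom * fy
    return out
```

For example, `upscale_2x_bilinear([[0, 4]])` fills the new in-between pixels with the intermediate values 1.0 and 3.0; the output has more pixels, but no more information than the source row.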

Super-resolution represents an entirely different approach, operating on multiple frames. It processes two to six sequentially snapped images; more images generally deliver higher-quality results, while three to five images provide the "ideal" source set from a quality-versus-performance standpoint, according to company representatives. Ideally, no objects should move from one frame to the next, although the algorithm does support "ghost removal" to deal with modest amounts of such motion.
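CEVA hasn't published the internals of its algorithm, but the basic idea behind multi-frame super-resolution can be illustrated with the textbook "shift-and-add" technique. The sketch below is not CEVA's method. It assumes the frames' sub-pixel offsets are already known (real implementations must estimate them through a registration step, not shown) and assumes ideal point sampling with no optical blur. It also uses a per-pixel median as a crude stand-in for ghost removal.

```python
from statistics import median


def shift_and_add(frames, offsets, scale=2):
    """Fuse low-resolution frames onto a grid `scale` times finer in each axis.

    frames  -- list of 2-D lists, all h x w
    offsets -- per-frame (dy, dx) sub-pixel shift, in low-res pixels,
               assumed known from a prior registration step
    Cells hit by several frames take the median of their samples, so a single
    frame with a moving object ("ghost") is outvoted; cells hit by no frame
    are left as None and would need interpolation.
    """
    h, w = len(frames[0]), len(frames[0][0])
    samples = [[[] for _ in range(w * scale)] for _ in range(h * scale)]
    for frame, (dy, dx) in zip(frames, offsets):
        for y in range(h):
            for x in range(w):
                # Position of this low-res sample on the high-res grid.
                hy = round((y + dy) * scale)
                hx = round((x + dx) * scale)
                if 0 <= hy < h * scale and 0 <= hx < w * scale:
                    samples[hy][hx].append(frame[y][x])
    return [[median(cell) if cell else None for cell in row] for row in samples]
```

With four frames offset by exactly half a pixel horizontally, vertically and diagonally, every cell of the 2x grid receives one real sample, which is where the extra detail comes from. With fewer or less favorably offset frames, some cells stay empty.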

Super-resolution was developed to address consumers' desire to capture DSLR (digital single lens reflex) camera-like images, including high-quality digital zoom, with smartphones, tablets and other consumer electronics devices. Such devices tend to use smaller image sensors than dedicated cameras, both to reduce bill-of-materials costs and to fit the shrunken lens and lens-to-sensor spacing found in their designs. High pixel counts are therefore less desirable in smartphones and tablets than in cameras, because packing many pixels onto a small sensor shrinks each pixel, resulting in egregious noise and other low-light performance problems.

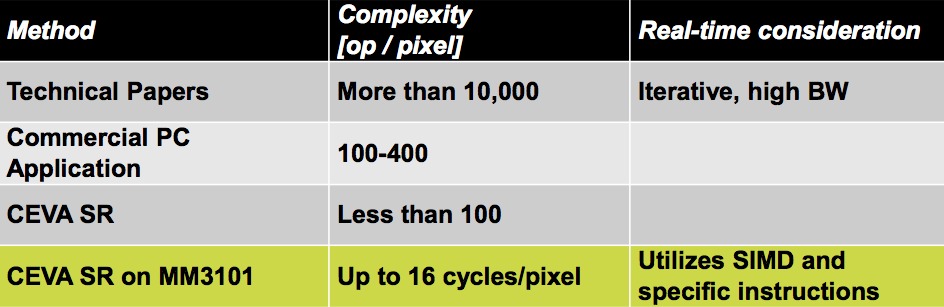

Alternative inter-frame up-scaling techniques sourced from academic research can produce pleasing results, according to CEVA, but they consume prodigious amounts of processing horsepower (and power) and still run slowly. As a result, CEVA's design targets for super-resolution were real-time operation, low power consumption and low memory bandwidth. Fortunately, the company had the MM3101 available to help meet these goals, acting as a co-processor to the primary system CPU.

Super-resolution can boost resolution by up to 4x (2x each in the horizontal and vertical axes) relative to the source images. The table that follows compares CEVA's inter-frame up-scaling algorithm (running both on a CPU and on the MM3101) with academic-sourced inter-frame alternatives, along with commercial intra-frame interpolation software. According to CEVA, the MM3101-based super-resolution algorithm transforms four 5-Mpixel source images into a 20-Mpixel output in "a fraction of a second," while consuming less than 30 mW of power (Table 2).

Table 2. Performance, power consumption and memory bandwidth optimizations, notably assisted by MM3101 DSP hardware acceleration, enable CEVA's super-resolution algorithm to speedily upscale four 5-Mpixel source images to a 20-Mpixel output.
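The resolution arithmetic behind the 4x figure works out as follows, where $W \times H$ is the source frame size in pixels:

$$
(2W) \times (2H) = 4\,(W \times H), \qquad 4 \times 5\ \text{Mpixel} = 20\ \text{Mpixel}
$$

Four 5-Mpixel frames therefore supply 20 Mpixel of samples in total, the same as the output pixel count. In the idealized case of frames offset by exactly half a pixel, each output pixel could be backed by one measured sample rather than an interpolated estimate.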

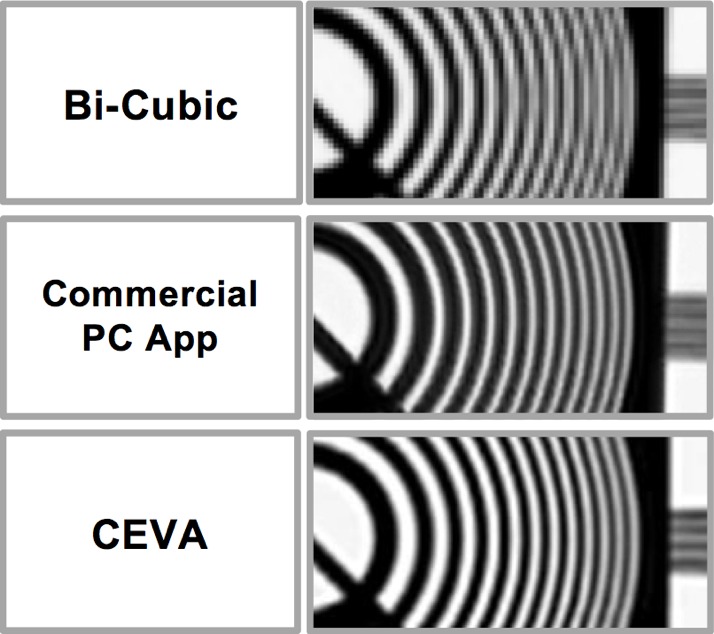

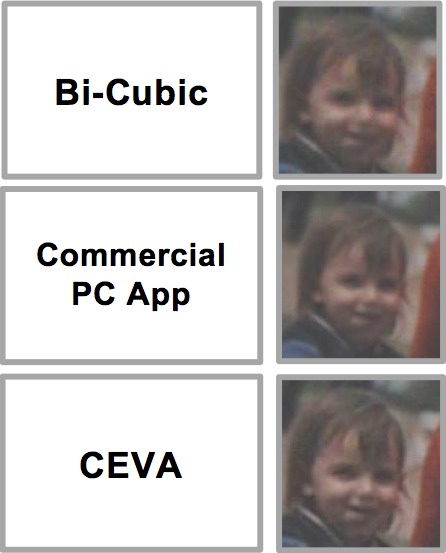

CEVA-provided images are also, according to the company, representative of the quality delivered by its inter-frame up-scaling approach compared with both conventional and proprietary intra-frame alternatives (Figure 1).

Figure 1. CEVA asserts that its super-resolution algorithm not only runs quickly and is stingy with its power requirements, but also produces high-quality results. As proof, the company offers several examples, using test images from LCAV (the Audiovisual Communications Laboratory) (above) and a photograph taken with a Canon EOS 550D DSLR (below).

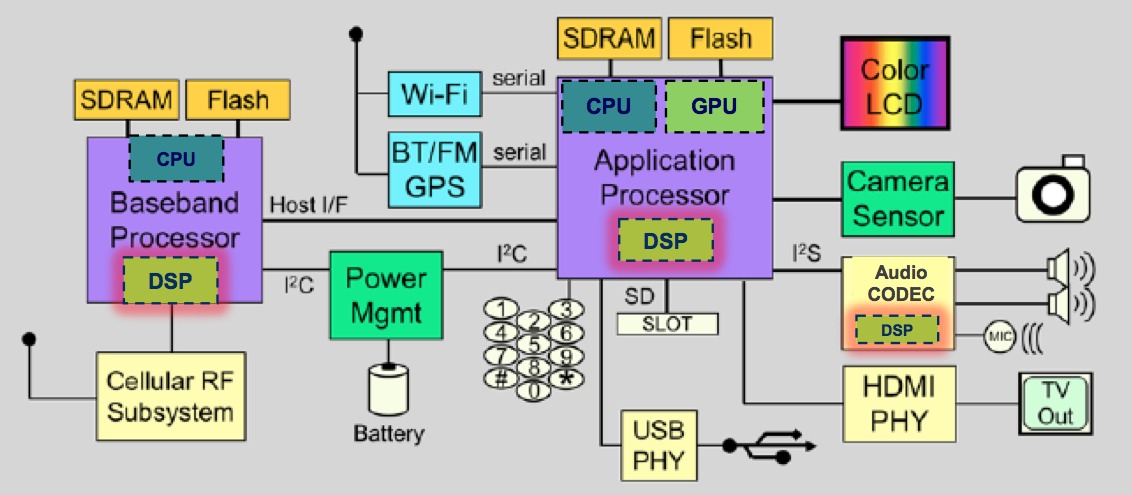

For more information on CEVA's super-resolution algorithm, please see the detailed technical presentation video from the April 2013 Embedded Vision Summit on the Embedded Vision Alliance website (site registration and login are required prior to access). CEVA's data suggests that multimedia algorithms such as super-resolution can deliver compelling results for the applications that employ them. As Figure 2 indicates, however, those results depend heavily on applications' ability to tap into any software libraries that may exist in the system, and to harness the associated audio, still and video image, vision and other DSP resources that may be integrated within the system's SoC.

Unfortunately, according to CEVA, such hardware acceleration support does not currently exist in the Android operating system, beyond that delivered by GPU-based APIs such as Canvas and OpenGL ES. Broader Android multimedia frameworks such as OpenCore and StageFright do exist to tap into (for example) the operating system's built-in codecs, but they exclusively leverage the system CPU. And circumventing the operating system layer by directly accessing the underlying software libraries and associated silicon resources is an unappealing kludge, because it results in hardware- and O/S version-specific code. CEVA's AMF (Android Multimedia Framework), also unveiled by the company in April 2013, strives to surmount this obstacle, albeit in a (currently) proprietary fashion (Figure 2).

Figure 2. The multimedia DSP resources increasingly found on application processors aren't yet accessible by Android applications in a SoC supplier-independent fashion, leading to the emergence of proprietary alternative approaches such as CEVA's AMF (Android Multimedia Framework).

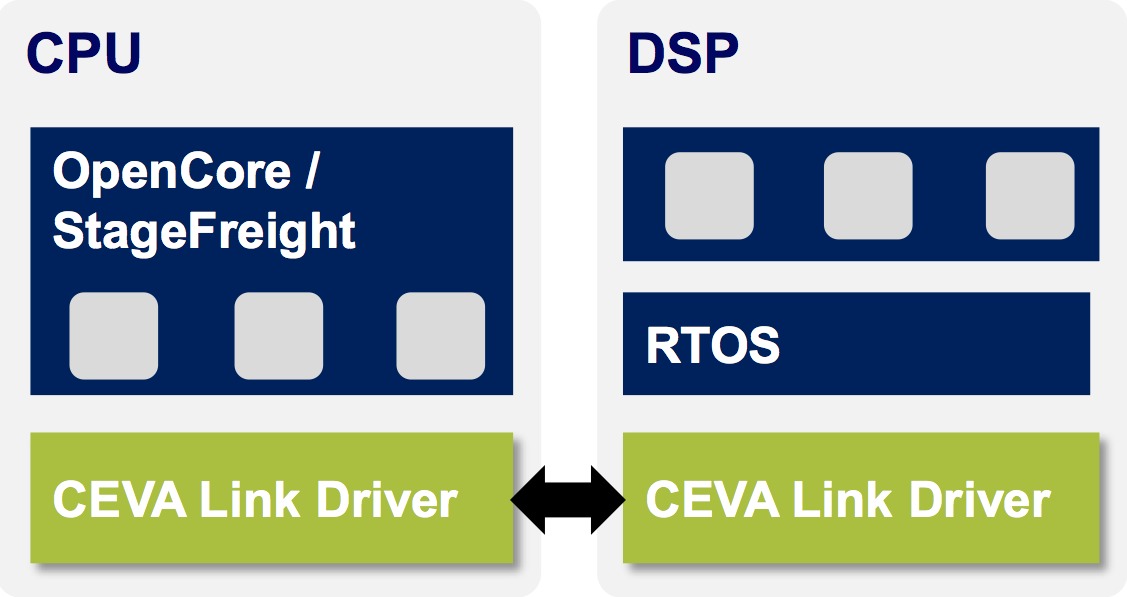

AMF operates in conjunction with OpenCore and StageFright, and compartmentalizes media components using Khronos' OpenMAX IL (Integration Layer) API. Multimedia tasks are abstracted on the CPU and executed on the DSP, which could be an imaging and vision core such as the MM3101 or an audio core such as the TeakLite-4, which InsideDSP covered in April 2012 (Figure 3).

Figure 3. CEVA's AMF "tunnels" multimedia components using OpenMAX IL "wrappers," with the handshake between the CPU and DSP core implemented via CEVA-specific link code both in the Android O/S and within the DSP's RTOS.

According to CEVA, "tunneling" the multimedia task hand-off to the DSP(s) using OpenMAX IL minimizes data transfer overhead at the CPU. Communication between the host CPU and DSP(s) takes place through shared-memory mailboxes, via a handshake between link driver code embedded in both the Android O/S and the DSP RTOS (real-time operating system). AMF is also aware of CEVA's PSU (power scaling unit) technology for reducing power consumption, and supports several IPC (inter-process communication) layers: control-only (i.e., a simple command-response approach), data and control, and task control.
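To make the control-only (command-response) IPC mode more concrete, here is a minimal Python simulation of a shared-memory mailbox, with a background thread standing in for the DSP. All class and function names are hypothetical, since CEVA hasn't published its link-driver API. A real implementation would use a physical shared-memory region and hardware interrupts rather than a Python attribute and `threading.Event` "doorbells."

```python
import threading


class SharedMailbox:
    """Single-slot mailbox standing in for a shared-memory region (hypothetical).

    'slot' plays the role of the shared memory; the two Events play the role
    of the interrupt doorbells the link drivers on each side would ring.
    """

    def __init__(self):
        self.slot = None                  # shared message area
        self.to_dsp = threading.Event()   # doorbell: host -> DSP
        self.to_host = threading.Event()  # doorbell: DSP -> host

    def host_call(self, command, timeout=1.0):
        """Host side: post a command, ring the DSP, block for the response."""
        self.slot = command
        self.to_dsp.set()
        if not self.to_host.wait(timeout):
            raise TimeoutError("no response from DSP")
        self.to_host.clear()
        return self.slot


def dsp_loop(mbox, handlers, stop):
    """DSP side: wait for the doorbell, run the named handler, post the result."""
    while not stop.is_set():
        if not mbox.to_dsp.wait(0.01):
            continue  # no command yet; re-check the stop flag
        mbox.to_dsp.clear()
        name, arg = mbox.slot
        mbox.slot = handlers[name](arg)
        mbox.to_host.set()


def start_dsp(handlers):
    """Start the simulated DSP in a background thread."""
    mbox, stop = SharedMailbox(), threading.Event()
    thread = threading.Thread(target=dsp_loop, args=(mbox, handlers, stop), daemon=True)
    thread.start()
    return mbox, stop, thread


if __name__ == "__main__":
    mbox, stop, thread = start_dsp({"scale": lambda n: n * 4})
    print(mbox.host_call(("scale", 5)))
    stop.set()
    thread.join()
```

Only a small command and its result cross the mailbox here; in the "data and control" mode, the slot would instead carry references to buffers already sitting in shared memory, so bulk media data need not be copied through the host.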

AMF is the latest in a series of DSP-enabling proprietary framework approaches from Android-supportive SoC suppliers such as Intel, NVIDIA, Texas Instruments and Qualcomm (for more, see the companion QDSP6 v5 coverage in this edition of InsideDSP). Encouragingly, CEVA representatives indicate that Google is currently working on supplier-independent DSP resource support in a future version of Android, due for release in the 6-to-9 month timeframe. But for now, it seems, Android application developers will need to rely on silicon vendor-proprietary schemes. And the supplier-independent DSP support situation unfortunately isn't currently any better for other mobile operating systems; CEVA plans a similar framework for Microsoft's Windows Phone O/S, for example.
