For decades, multiprocessor systems were rare and most developers never had to think about how to program them. This began to change roughly a decade ago. In the spring of 2005, both AMD (Athlon 64 X2) and Intel ("Smithfield") unveiled x86 CPUs with two processor cores on a single die. More recently, not only has the number of both physical and virtual on-chip cores steadily grown, but the multi-core trend has also expanded into mobile and embedded applications. As multiprocessor chips become more prevalent, the ability to program them efficiently has become more important.

BDTI recently spoke with Doug Norton, Vice President of Sales and Marketing at Texas Multicore Technologies (TMT), and Lawrence Waugh, co-founder and COO at Calavista Software (a TMT partner), about multi-core software efficiency. This in-depth briefing followed up on a shorter meeting at the AMD Embedded Developers Conference. Norton and Waugh began by quoting from a 2008 article in Dr. Dobb's Journal, which offered eight "simple" rules for developing efficiently threaded applications:

1. Be sure you identify truly independent computations.

2. Implement concurrency at the highest level possible.

3. Plan early for scalability to take advantage of increasing numbers of cores.

4. Make use of thread-safe libraries wherever possible.

5. Use the right threading model.

6. Never assume a particular order of execution.

7. Use thread-local storage whenever possible; associate locks to specific data, if needed.

8. Don’t be afraid to change the algorithm for a better chance of concurrency.

To the above list, Norton and Waugh added one more rule, which they numbered "0": "Hire a team of PhDs who are experts in computer architecture." The suggestion underscores their view of the daunting challenge that multiprocessor programming represents for many application developers. And if you suspect that they have a proposed solution to the problem, you'd be right. It consists of the SequenceL programming language, based on two decades of research by Texas Tech University in partnership with NASA, and an accompanying toolset consisting of integrated development environment (IDE) plug-ins, a debugger, an interpreter, an auto-parallelizing compiler, and a runtime environment. TMT was formed in 2009 to commercialize SequenceL technology, obtaining an exclusive license from Texas Tech; the core technology inventors at Texas Tech are also principals at TMT.

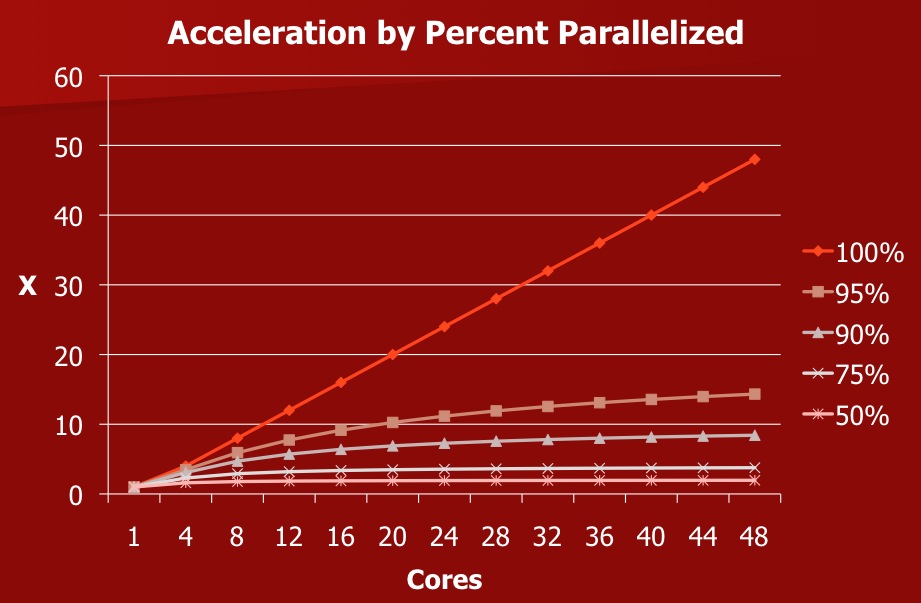

Other automated solutions exist, such as the Grand Central Dispatch technology originally developed at Apple and later open-sourced, that attempt to extract parallelism from existing code. But, according to Norton and Waugh, they only solve part of the problem (and then only partially) because they retroactively focus on extracting multi-threaded capabilities from code that may be fundamentally sequential in nature. As such, the degree of parallelism that can be obtained is limited, and so is the resulting speedup, regardless of the number of processing cores available (Figure 1).

Figure 1. Unless you're able to make your code highly parallel, says Texas Multicore Technologies, your speedup percentage results will be capped (and modest) no matter how many processing cores you devote to the problem.

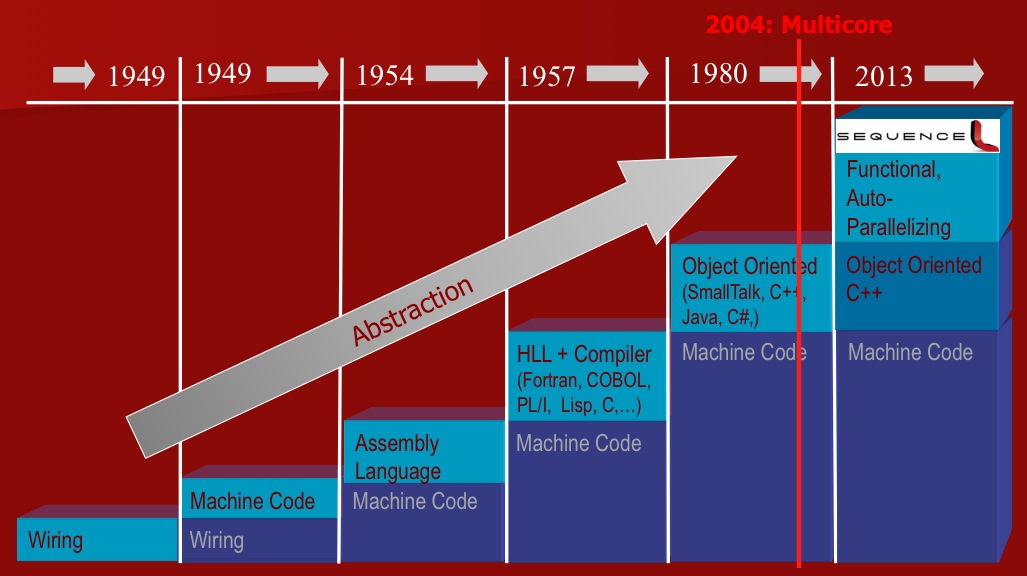

The results will remain limited, say Norton and Waugh, unless you go beyond the thread-specific rules 5 and 7 in the above list and follow rule 2: "Implement concurrency at the highest level possible." That's where SequenceL, which they view as the logical next evolutionary step in raising the level of programming abstraction, comes in (Figure 2). Although its syntax is unique, its compiler outputs C++, so you can optionally focus your SequenceL development efforts on only the most inherently parallelizable functions, merging the resulting modules with the rest of your code written in a more conventional language. And if you intend for your system to harness additional hardware acceleration by means of a GPU, FPGA, or other more special-purpose processor, you're in luck: the SequenceL compiler can optionally also output OpenCL code.

Figure 2. SequenceL is, according to its advocates, a logical evolutionary progression in programming language abstraction, intended to address the increased parallelization needs of multi-core CPUs.

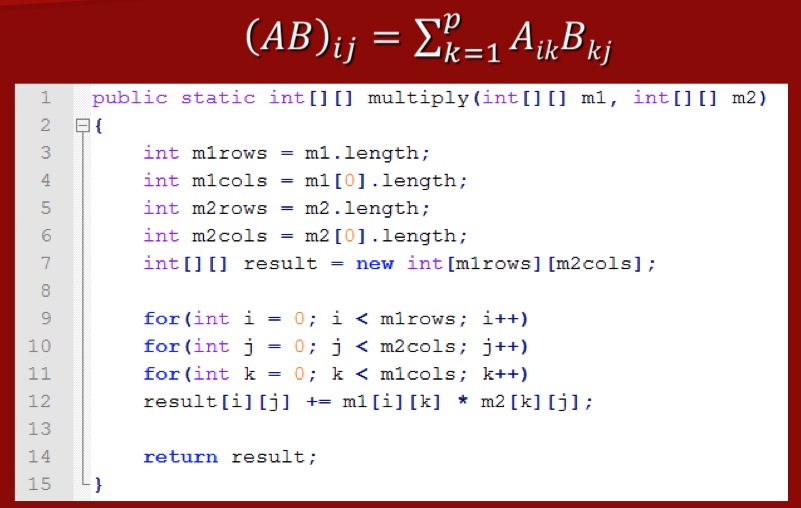

Norton and Waugh provided a detailed case study of SequenceL's claimed capabilities: computing the m-by-n matrix AB, the product of an m-by-p matrix A and a p-by-n matrix B. Figure 3 shows Java code that implements the matrix multiply function.

Figure 3. A matrix multiplication function implemented in Java results in source code that's difficult to write and read.

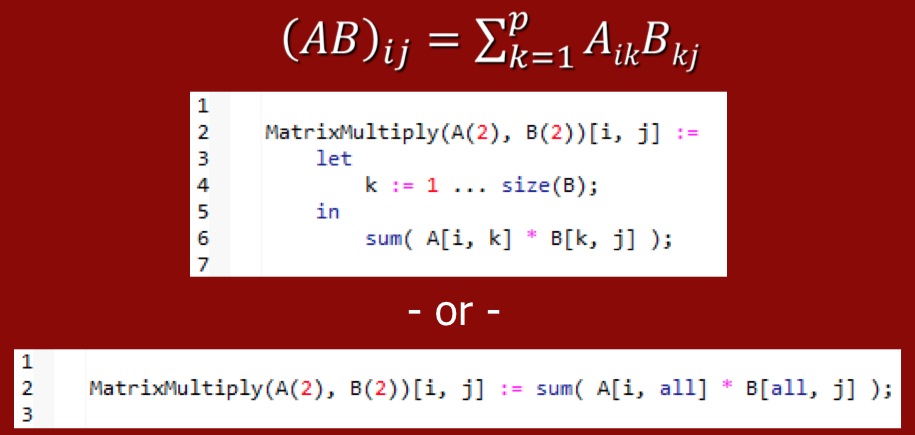

Figure 4 presents that same function as more compactly implemented in SequenceL.

Figure 4. That same matrix multiplication coded in SequenceL is much more compact and, its developers claim, easier for programmers to understand.

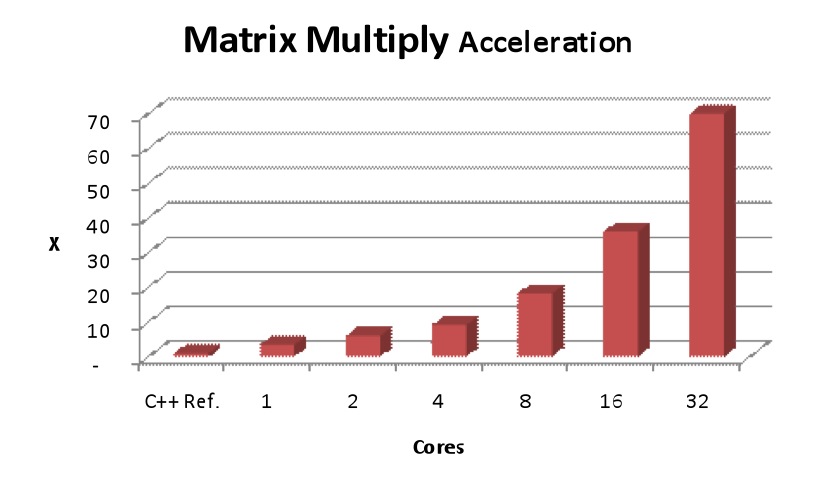

And how do SequenceL performance results on matrix multiplication compare to those derived from a more mainstream programming language, such as sequentially coded (i.e., worst case, from a parallelization standpoint) C++? Quite well, if TMT's claims are to be believed (Figure 5).

Figure 5. Matrix multiplication performance, says Texas Multicore Technologies, scales smoothly with core count when coded in SequenceL.

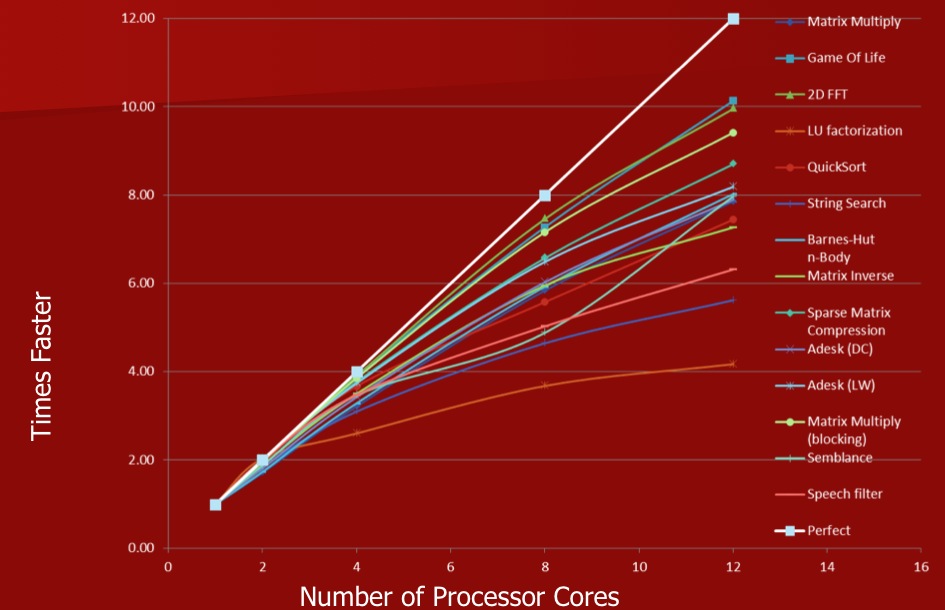

Norton and Waugh also provided data indicating the degree of speedup possible with SequenceL, scaling with core count across a variety of particularly parallel-processing-friendly algorithms (Figure 6). The "Game of Life" that they reference, for those of you who may not already be familiar with it, is not the Milton Bradley-marketed board game. Instead, it's a mathematical algorithm exercise, which Wikipedia defines as "a cellular automaton devised by the British mathematician John Horton Conway in 1970. The 'game' is a zero-player game, meaning that its evolution is determined by its initial state, requiring no further input. One interacts with the Game of Life by creating an initial configuration and observing how it evolves or, for advanced players, by creating patterns with particular properties."

Figure 6. Other common algorithms can also respond well to SequenceL's parallelization efforts.

There’s no doubt that mainstream programming languages are not great for writing parallel programs. Sequential languages like C and C++ make it difficult for compilers to find parallelization opportunities in code. Programming languages designed to expose parallelism, like SequenceL, are a much better approach from a technical perspective. But convincing developers to adopt a new programming language is very challenging. And convincing engineering managers to adopt a language that is controlled by a single small company will also be a real challenge for TMT. Ultimately, the success of SequenceL may require that TMT enlist larger partners, such as chip and tool suppliers, to support it more fully than is currently the case.
