In late January of this year, Movidius and Google broadened their collaboration plans, which had begun with 2014's Project Tango prototype depth-sensing smartphone. As initially announced, the companies' broader intention to "accelerate the adoption of deep learning within mobile devices" was somewhat vague. Earlier this month, however, at least some details of the planned collaboration became clearer with the unveiling of Movidius' Fathom Software Framework and Neural Compute Stick (Video 1).

Video 1. Movidius shares its vision for the Fathom deep learning platform in this introductory clip.

Hardware details first: the Neural Compute Stick is a USB 3 module based on the MA2450 variant of Movidius' Myriad 2 VPU (vision processing unit) family, and it also contains 512 Mbytes of LPDDR3 SDRAM (Figure 1). The company claims that the Neural Compute Stick's peak performance on convolutional neural network tasks ranges from 80 to 150 GFLOPS, depending on network complexity and required precision, while peak power consumption stays below 1.2 watts. The Neural Compute Stick is also backward compatible with USB 1.1 and USB 2 systems, albeit with reduced performance (Movidius hasn't specified by how much) due to the lower interface bandwidth.
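The claimed figures lend themselves to some quick back-of-envelope arithmetic. The Python sketch below divides the quoted throughput range by the 1.2 W power ceiling, and estimates the idealized time needed to move one input frame across each USB generation. The frame size (a 224x224 RGB image stored as 16-bit values), the nominal signaling rates, and the zero-overhead link model are illustrative assumptions, not Movidius figures.

```python
# Back-of-envelope figures derived from Movidius' claimed specs.
# ASSUMPTIONS (not from Movidius): a hypothetical 224x224 RGB input
# stored as 16-bit values, nominal USB signaling rates, no protocol overhead.

PEAK_GFLOPS_RANGE = (80.0, 150.0)  # claimed CNN throughput range
PEAK_POWER_W = 1.2                 # claimed ceiling on peak power

# Nominal signaling rates in bits per second.
USB_RATES_BPS = {
    "USB 1.1 (Full Speed)": 12e6,
    "USB 2 (High Speed)": 480e6,
    "USB 3 (SuperSpeed)": 5e9,
}


def gflops_per_watt(gflops: float, watts: float) -> float:
    """Efficiency if the quoted throughput is reached within the power ceiling."""
    return gflops / watts


def transfer_ms(num_bytes: int, rate_bps: float) -> float:
    """Idealized time, in milliseconds, to move num_bytes at rate_bps."""
    return num_bytes * 8 / rate_bps * 1e3


if __name__ == "__main__":
    for g in PEAK_GFLOPS_RANGE:
        print(f"{g:.0f} GFLOPS -> at least {gflops_per_watt(g, PEAK_POWER_W):.1f} GFLOPS/W")

    frame_bytes = 224 * 224 * 3 * 2  # hypothetical FP16 input frame
    for name, rate in USB_RATES_BPS.items():
        print(f"{name}: {transfer_ms(frame_bytes, rate):.2f} ms per frame")
```

Under these assumptions the script reports about 66.7 and 125.0 GFLOPS/W, and per-frame transfer times of roughly 200.70 ms (USB 1.1), 5.02 ms (USB 2), and 0.48 ms (USB 3). That gap suggests why an older host interface could become the bottleneck. Real links deliver less than their nominal rates, so actual figures would be worse.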

Figure 1. Movidius' new Fathom Neural Compute Stick, based on the company's Myriad 2 VPU (vision processing unit), also includes 512 Mbytes of SDRAM and other circuitry in a USB 3 peripheral intended for straightforward deep learning development and prototyping (the final product will not come in see-through packaging).

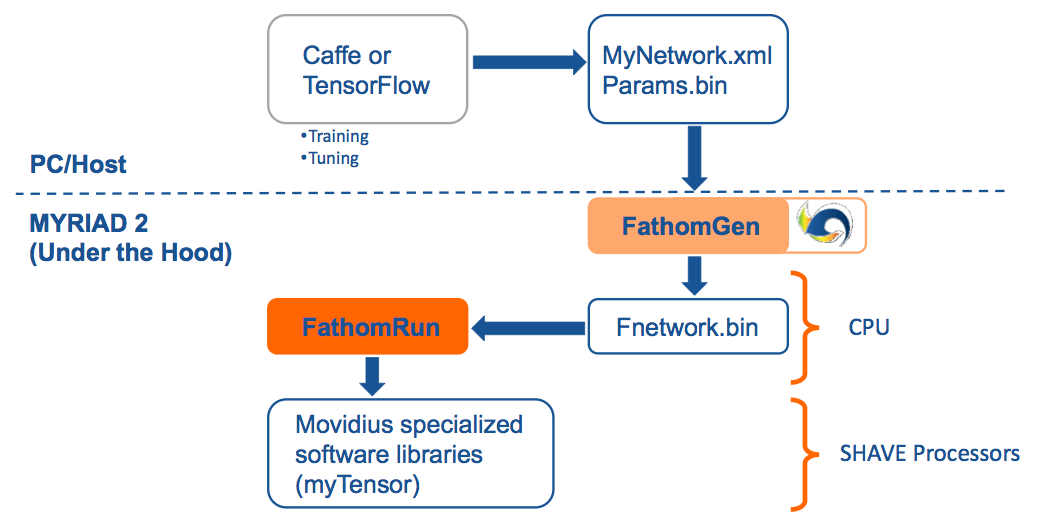

What about the Google collaboration angle? For the answer, turn to the software side of Movidius' story. At its core, the Fathom Software Framework is a utility that converts existing pre-trained neural networks, generated by deep learning frameworks such as Caffe and Google's TensorFlow, into equivalents ready for execution on Myriad 2. The intended workflow: develop, train, and assess the accuracy of a base neural network model on an Ubuntu Linux workstation, then migrate the model to a Myriad 2-optimized equivalent for execution on the Neural Compute Stick, iterating on prototyping and optimization until the Movidius-tailored version achieves the desired speed and accuracy.
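Fathom's internal conversion steps aren't described here, but the "assess accuracy, then iterate" loop can be illustrated in miniature. The sketch below assumes that the Myriad 2-optimized model runs at reduced (16-bit floating-point) precision, and compares one toy fully connected layer computed in double precision against an FP16-emulated version. The layer, its random weights, and the per-operation rounding model are illustrative assumptions, not Movidius' toolchain; real hardware may, for example, accumulate at higher precision.

```python
import random
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dense(weights, inputs, quantize=False):
    """Toy fully connected layer: one dot product per weight row.

    With quantize=True, every operand, product, and partial sum is rounded
    to FP16 (an ASSUMED model of reduced-precision execution).
    """
    q = to_fp16 if quantize else (lambda v: v)
    outputs = []
    for row in weights:
        acc = 0.0
        for w, x in zip(row, inputs):
            acc = q(acc + q(q(w) * q(x)))
        outputs.append(acc)
    return outputs


def max_abs_error(ref, test):
    """Largest element-wise deviation between two output vectors."""
    return max(abs(a - b) for a, b in zip(ref, test))


def argmax(values):
    """Index of the largest value (the 'top-1' class)."""
    return max(range(len(values)), key=values.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for repeatability
    weights = [[rng.uniform(-1, 1) for _ in range(64)] for _ in range(10)]
    inputs = [rng.uniform(0, 1) for _ in range(64)]

    ref = dense(weights, inputs)
    half = dense(weights, inputs, quantize=True)
    print("max abs error:", max_abs_error(ref, half))
    print("top-1 agrees:", argmax(ref) == argmax(half))
```

In a real workflow the same comparison would run over a validation set, and a developer would decide whether the accuracy loss is acceptable before moving on to speed tuning.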

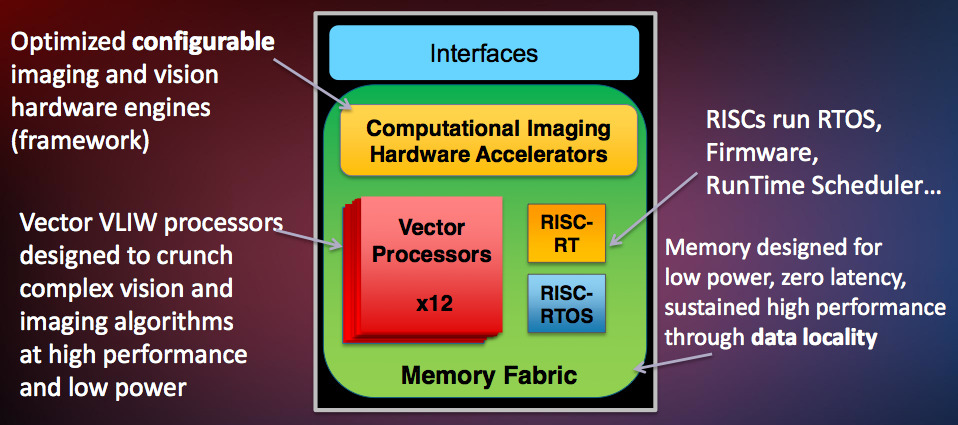

For real-time object recognition and other deep learning tasks on the Neural Compute Stick, Movidius has focused its near-term attention on Google's TensorFlow, and will consider support for other frameworks based on customer requests. As the company's presentation at the 2014 IEEE Hot Chips Symposium reveals, the Myriad 2 SoC contains not only multiple vector processors and image processing acceleration blocks but also several RISC CPU cores capable of running real-time operating system stubs, firmware, and other code (Figure 2).

Figure 2. The Myriad 2 SoC's integrated RISC CPU cores and vector processors (top) find use in executing embedded portions of partner Google's TensorFlow deep learning software library (bottom). Movidius' Fathom Software Framework also supports the popular Caffe framework for offline development and prototyping.

With Fathom, Myriad 2's RISC CPU cores (along with its vector processors) implement TensorFlow's embedded "online mode" option. By dividing the overall TensorFlow processing between the Neural Compute Stick and the host it's connected to (such as one of the ARM-based Raspberry Pi boards), you can add real-time neural network processing to any existing TensorFlow-capable CPU and associated platform. The ultimate intention is for the Myriad 2 SoC, its memory, and other circuitry to be embedded within a drone or other end product. In the meantime, the Fathom USB peripheral enables relatively straightforward deep learning prototyping on an existing USB-equipped design.
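How Fathom actually partitions a TensorFlow graph between host and stick isn't detailed here. As a hedged illustration of the general host/accelerator split, the Python sketch below assumes that the host handles preprocessing and postprocessing while the attached device evaluates the network. `fake_accelerator` is a hypothetical stand-in for the device, not a Movidius API, and the three-class "network" is a toy.

```python
import math
from typing import Callable, List, Sequence


def preprocess(pixels: Sequence[int]) -> List[float]:
    """Host side: scale 8-bit pixel values to [0, 1]."""
    return [p / 255.0 for p in pixels]


def softmax(logits: Sequence[float]) -> List[float]:
    """Host side: numerically stable softmax over the device's raw outputs."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs: Sequence[float], k: int) -> List[int]:
    """Host side: indices of the k most probable classes, best first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


def run_pipeline(
    pixels: Sequence[int],
    accelerator: Callable[[List[float]], List[float]],
    k: int = 1,
) -> List[int]:
    tensor = preprocess(pixels)   # runs on the host CPU
    logits = accelerator(tensor)  # ASSUMED to run on the attached device
    return top_k(softmax(logits), k)  # back on the host CPU


def fake_accelerator(tensor: List[float]) -> List[float]:
    """HYPOTHETICAL stand-in for the stick: a fixed 3-class linear model."""
    s = sum(tensor)
    return [s, -s, 0.0]


if __name__ == "__main__":
    print(run_pipeline([12, 200, 37, 255], fake_accelerator, k=2))
```

Passing the accelerator in as a callable keeps the host code identical whether inference runs on a CPU reference implementation or on an offload device, which makes side-by-side accuracy checks straightforward.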

According to Gary Brown, Vice President of Marketing at Movidius, the Fathom platform is applicable to a broad variety of pattern matching and deep learning functions, such as speech recognition. Computer vision, however, remains the company's focus, and Myriad 2's design reflects it: its embedded imaging accelerators and multiple integrated MIPI CSI camera interfaces, for example, tailor it for such applications. And, as other industry observers have recently noted, computer vision workloads are particularly compute-intensive and therefore well suited to hardware acceleration.

The Fathom Software Framework and Neural Compute Stick were shown publicly for the first time at the Embedded Vision Summit earlier this month (Video 2). According to Movidius, initial Neural Compute Stick samples are now available to Tier 1 customers and deep learning researchers, with broader availability slated for later this year. General pricing has yet to be finalized, but Brown indicated in a recent briefing that it would likely be under $200, and subsequent press coverage suggests an even more aggressive sub-$100 target.

Video 2. The early-May 2016 Embedded Vision Summit marked the first public showing of the Fathom platform.
